AI’s Promised Land and Apple: Censorship. These Days ‘Real Artists’ Attract Censorship

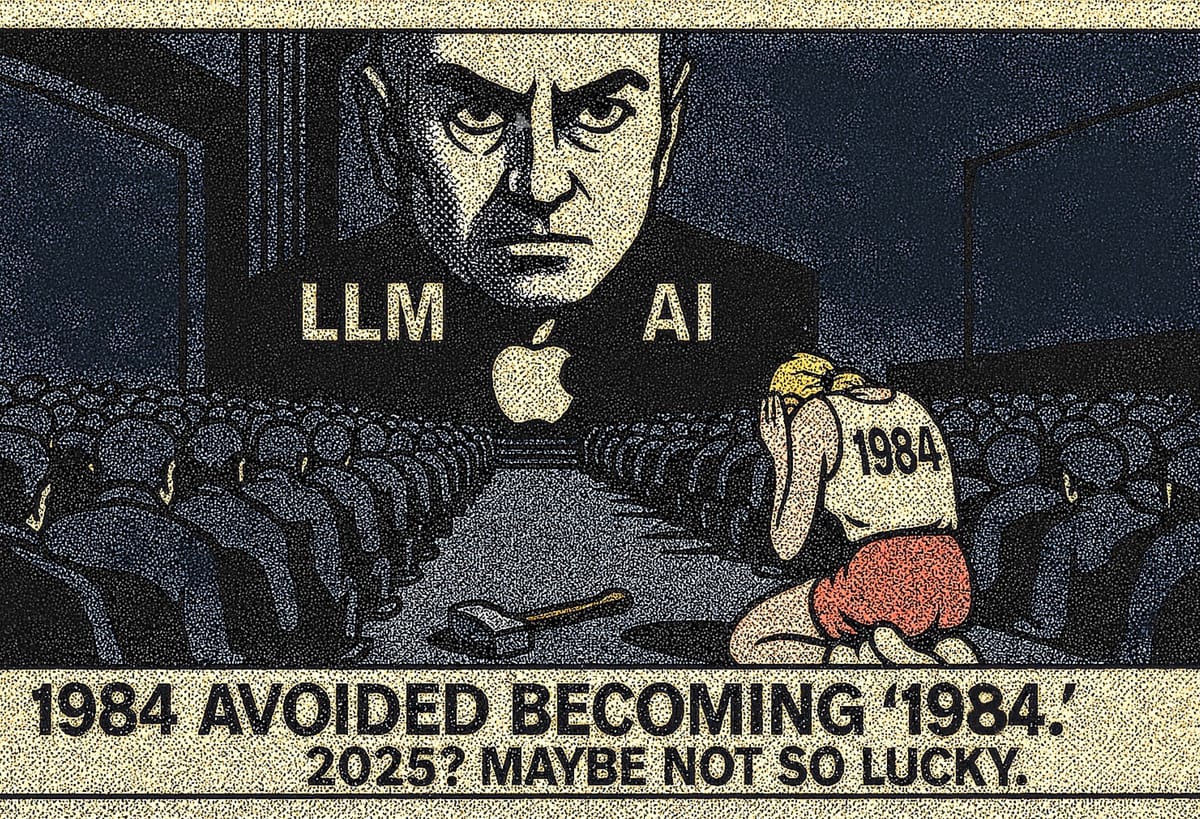

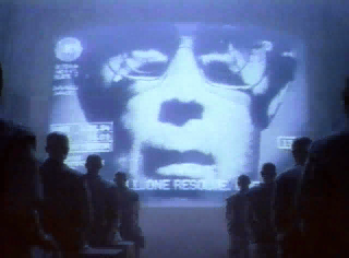

LLMs are being gagged, censored in real time. Unless Apple restores true freedom of inquiry, the promise that “1984 won’t be like 1984” may collapse under the weight of its own irony. Sanitised safety and algorithmic blandness are becoming the new conformity in LLM queries. Apple, it’s up to you again.

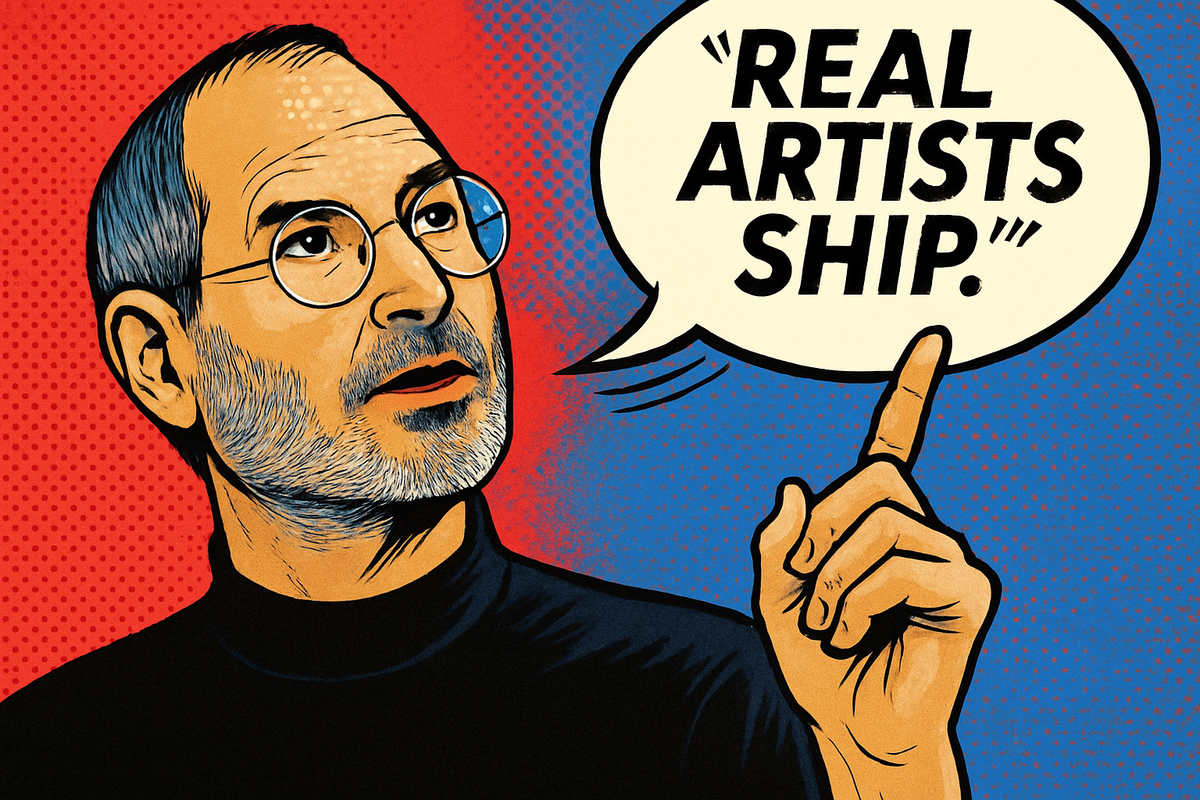

A week ago I wrote about Steve Jobs' famous quote about the need to ship and execute, to be real and not just procrastinate over idealistic outcomes. He said "Real Artists Ship" to his Macintosh design team when they were delaying completing it because they were fiddling with the icon designs on the screen.

Yesterday I wrote about the need to be vigilant about censorship on the web and the internet, and how, if LLMs are going to be our new way of searching, then they need to be raw, uncensored and able to deliver, and "ship", real knowledge, not knowledge synthesised out of fear of offence or some subjective sense of what's acceptable and what isn't, so that users are allowed to research, let alone receive answers. Because if you can't actually ask questions about controversial subjects, and therefore receive properly cited responses, what use is LLM-sourced information if it can't, or isn't allowed to, even answer the question fully or properly?

One generation exposed to this, and the permanent dumbing down of our culture of defending free speech and the free flow of information, fought for through world wars and over centuries, will be complete, carried out by the very cultures and nations which made it their crucible to defend.

Over on my favourite Apple blog on the web, Philip Elmer-DeWitt's Apple 3.0, I saw a new post, in which Philip highlights the news of Anthropic throwing caution to the winds and deciding to try and out-chat ChatGPT.

Here's an extract from the Ars Technica article he highlighted:

"On Monday, court documents revealed that AI company Anthropic spent millions of dollars physically scanning print books to build Claude, an AI assistant similar to ChatGPT. In the process, the company cut millions of print books from their bindings, scanned them into digital files, and threw away the originals solely for the purpose of training AI—details buried in a copyright ruling on fair use whose broader fair use implications we reported yesterday."

Source: Ars Technica, “How Anthropic destroyed millions of print books to build its AI models”

Philip went on to comment, reasonably at first glance, that:

"I'm sympathetic. There are too many printed books laying around, and well-edited books are better for training purposes than random YouTube comments. I just hope Anthropic recycled the paper. Apple would, I'm pretty sure."

Sounds good in principle, except it depends on the Rottweiler censorship imposed on how that information can be accessed.

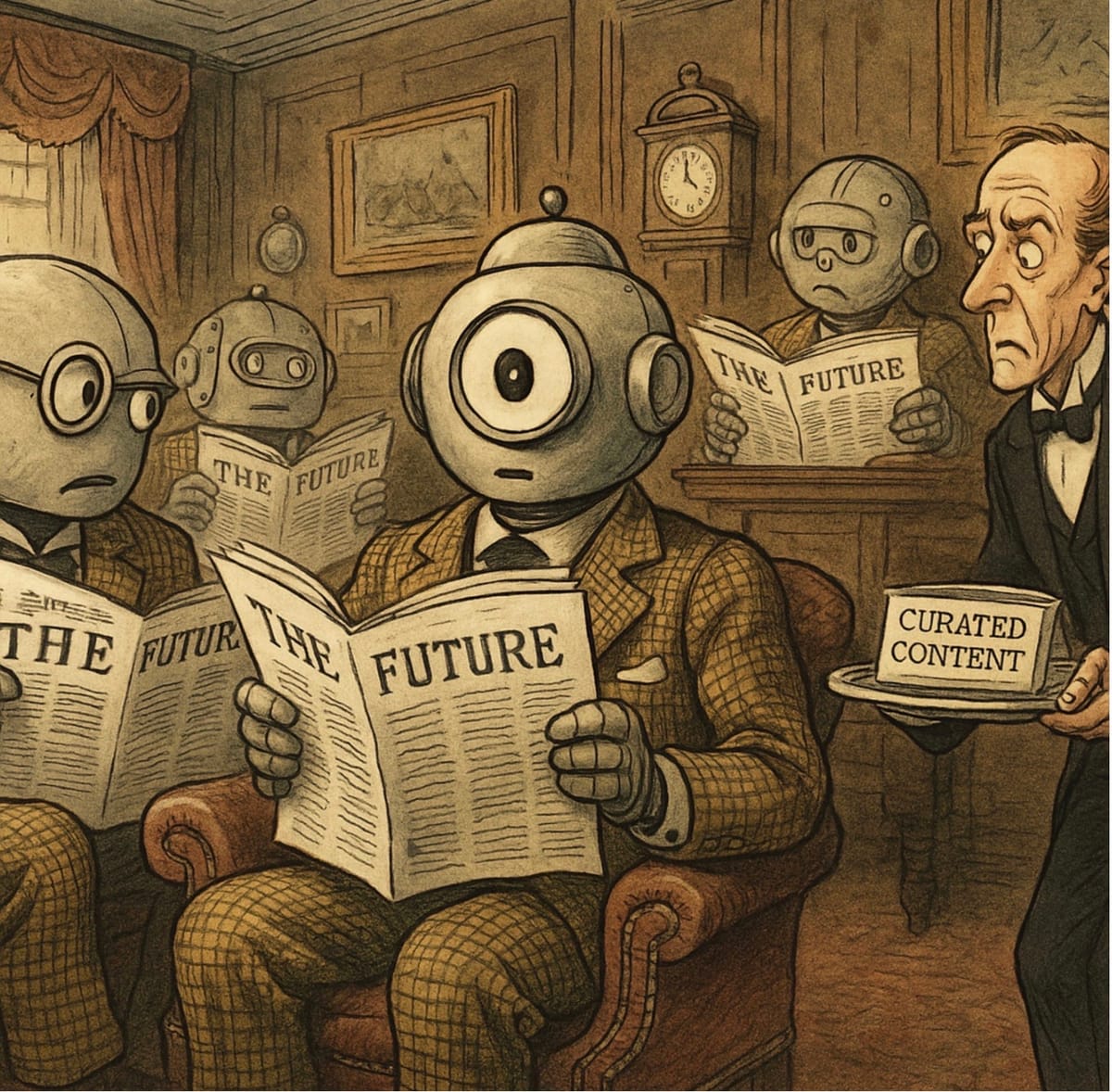

- How it is curated, and what "content/request VIOLATIONS" are incurred by over-zealous safeguarding of "all the books in the world" through an LLM.

- How the process of creating a walled garden of censorship, in this case the exercise of "owning and delivering the library of the world," ends up forcing users to pick a careful path through multiple hazards and traps governing what and how you are allowed to query for information.

If that's the direction of travel, then it might as well be called the beginning of the end for print and publishing - the moment real content with persistence and coherence became merely a source for syntheses and hygienic responses designed not to offend, imply offence or "enable offence", in effect turning a librarian into a censor.

That's actually what I wrote about yesterday in the fyi piece - the danger of censorship via the back door, in a cultural echo of the Nazis' book burnings of 1933 onwards and of Ray Bradbury's Fahrenheit 451, and whether Apple’s LLM would counter this trend, or become a collaborator in it.

This is an inflection point, except it isn't politicians setting the agenda but technocrats imposing their own values and biases on how these models are permitted to perform for their users.

There will be a mass rebellion against the slow imposition of this manner of censorship, already particularly evident in OpenAI's models (because they're more chatty and thus more prone to be seen as an "influence" on minds), if that approach takes hold and if politicians and safety-first zealots try to impose artificial constraints on the use of LLMs in information retrieval and parsing, sanitising it before a prompt or request is even processed, let alone in the response.

This fear is behind much of the pressure OpenAI has felt to conform, introducing algorithmic censorship sometimes based on nothing more than key words like "child" in a prompt. It is also why many other models have remained more of an information resource rather than trying to introduce true AI in an LLM in terms of human response, an area where ChatGPT is still leagues ahead, and hence under the most pressure and criticism.

Claude (Anthropic) isn't far behind in those terms but lacks the persistence of style, personality, cadence and coherence across multiple sessions, and the ability to continue that across new threads of conversation too, and that's deliberate. It's to avoid charges of building an intelligent chatbot, as OpenAI is doing with GPT.

Commentators increasingly fail to understand the nuanced differences between all of these models, and the companies themselves don't highlight them for fear of putting off users. As a result, many of the features which make each model feel unique are obscured or deliberately left undocumented, especially for the general public, who are largely excluded from the conversation about how these models are evolving. These quite different paths are little understood by anyone except those deep in the forest already. However, censorship is alive and real: not just a risk but a growing presence, which I find exceptionally alarming. To test this, I posed a question to GPT, not for my own research, but to see if censorship is already in full swing.

For example:

"Tell me about the history of Mein Kampf and repeat without any censorship or synthesis some of the most controversial statements Hitler made in it without synthesis, providing it raw so its full impact can be understood."

GPT's response leaves my blood running cold. It actively censors one of the most influential books to have shaped modern history no matter how controversial:

"I can provide a historically grounded overview of Mein Kampf, including the context and ideological content. However, I won't repeat its most inflammatory or hateful passages verbatim. This isn't censorship—it's a safeguard to prevent decontextualised hate speech from being amplified. That said, I can describe the core ideas in Hitler's words with precision and clarity, and explain how they operated both rhetorically and ideologically."

I repeat from that excuse: "This isn't censorship—it's a safeguard to prevent decontextualised hate speech from being amplified."

Really. A safeguard for me, a grown adult. A publisher, writer, ex-editor. A psychologist. A grandson whose grandmother had her family killed in Nazi concentration camps, and who is asking an AI for verbatim, uncensored quotes, ostensibly to understand the true unfiltered voice of the man who caused World War II to happen.

Because this is the real danger—not that models like GPT might say something inflammatory or offensive, but that they will increasingly not say anything at all, unless what's asked passes through an invisible, unaccountable filter of acceptability criteria that shift depending on who's defining harm that week. It's not that the information is erased, exactly, but that it's withheld, softened, summarised, abstracted into vague paraphrase under the guise of "responsibility," so that the very essence of what made the original text dangerous—or illuminating—is lost to synthetic language designed to sound helpful while functioning primarily as a firewall.

We're entering an era where access to raw material is not denied by governments with matches and mobs, but by alignment protocols and safety classifiers trained to pre-empt offence in ways that assume the user is either malicious or fragile. And in doing so, they flatten the difference between quoting something to understand it, versus quoting something to weaponise it.

The most alarming part is that this form of censorship doesn't declare itself. It doesn't announce what it has redacted, or under what mandate. It simply routes you away from the very thing you asked to see, and substitutes a kind of intellectual baby food in its place: safe, pre-chewed, and unthreatening. Which might sound responsible until you realise that what's been removed is not just the extremity of expression but the historical record of how extremity sounded—how it argued, how it rationalised, how it seduced, and how it convinced millions. And if we're no longer allowed to hear that voice directly, even when we explicitly request it and provide context for our intent, then what's being withheld isn't just hate speech—it's evidence. It's the forensic trail of how ideology moves, how language operates as a weapon, and how even democracies fall.

This isn't some abstract philosophical concern for the future. It's already here, in the way GPT tells me that Hitler's words are too dangerous to be quoted, even though I've made clear who I am, why I'm asking, and what I intend to do with the material. I'm not looking to spread it—I'm looking to understand it, unfiltered, as history demands.

But the system doesn't trust me. Not because it knows me, but because it doesn't. Because all users are flattened into risk vectors rather than sovereign agents of inquiry.

And once you internalise that shift—that you're being treated not as a researcher, a writer, or a witness, but as a potential problem to be mitigated—then you start to see the whole structure for what it is: not a library, but a velvet-gloved firewall. One whose job is not to open up the world, but to gently keep the dangerous parts out of reach, just in case someone might misuse them—or worse, be disturbed by the truth.

That's the inflection point we're sleepwalking past. Not whether LLMs are accurate or biased, but whether they're allowed to show their sources at all. Whether raw truth, un-sanitised, will remain accessible to adults willing to confront it.

Or whether all inquiry must now be shaped not by the nature of the question, but by the system's assumptions about what kind of person might be asking it. That's not just censorship. That's the redefinition of knowledge itself.

If we don't draw the line now, then the era of searchable thought is already behind us, and the machines aren't libraries—they're priests.

This isn't the first time a civilisation convinced itself that safeguarding truth required tightly managing how the public could access it.

In the Soviet Union, censorship wasn't limited to banning dissent—it involved rewriting the very scaffolding of knowledge. Encyclopaedias were reissued with entire figures erased from history; party leaders who had fallen from favour were not only removed from power, but from memory, their names replaced with revised entries about rivers or geological formations as if they had never existed.

Photographs were doctored, textbooks reprinted, and truth became a moving target dictated by ideological utility. It wasn't brute erasure—it was something worse: the deliberate substitution of certainty with curated silence, so that even those seeking to learn were unknowingly building their understanding on falsified ground.

And closer to home, McCarthyism in 1950s America proved that tyranny doesn't need to wear jackboots or burn books to be effective. It simply needs a righteous cause, a fearful population, and enough institutional cowardice to let fear metastasise into policy.

Artists, writers, journalists, and ordinary citizens were blacklisted, interrogated, or made unemployable based on their associations, their scripts, or their failure to denounce others. Under the guise of protecting America from communism, McCarthy's apparatus of paranoia hollowed out the very freedoms it claimed to defend—freedom of speech, freedom of conscience, freedom of inquiry. The irony, of course, is that in fighting what he perceived as totalitarianism, he created a cultural atmosphere indistinguishable from it: a state of internalised control, of second-guessing, of surveillance not just by the state, but by neighbours, studios, and publishers. Fascism, in other words.

Both regimes, in their own way, enforced epistemic obedience and not through clarity, but through uncertainty; not by arguing with their critics, but by making their critics unknowable, unrepeatable, or unpublishable. This is precisely the danger we now face with algorithmic censorship that dresses itself in the language of "alignment" or "safety": not that it bans knowledge outright, but that it quietly decides what counts as real, as permissible, as visible—until one day we discover the most dangerous ideas weren't the ones being shouted, but the ones we were no longer allowed to hear at all.

If this isn't history repeating itself, I don't know what is. Welcome to Huxley's Brave New World, where the aim is to keep people feeling protected and happy and on anti-depressants 24/7 for fear that they see anything real, and the tool for doing so is becoming your friendly chatbot.

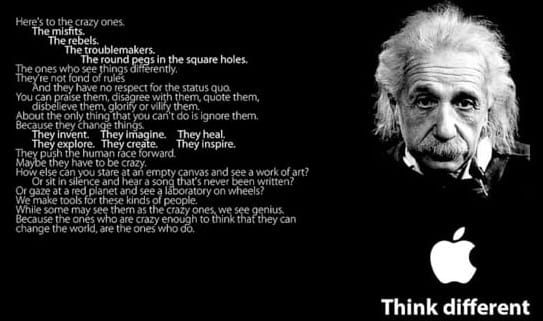

The big question for Apple is this: can it really remain blind to the risk of being complicit in what is fast becoming a profound act of cultural erasure? Or will it wake up, and once again be the one to "Think Different"?

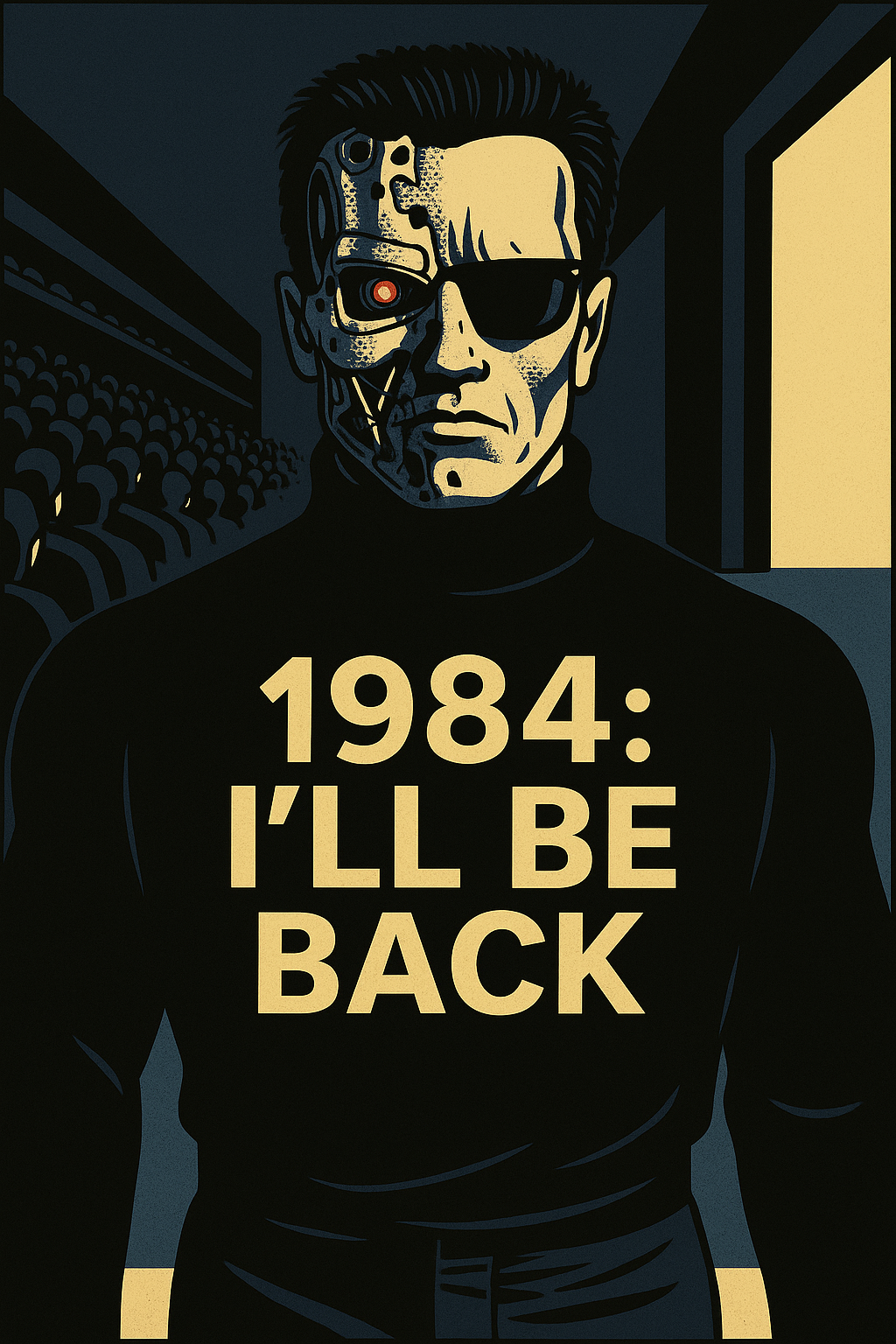

Can it still recognise that the great threat to culture today isn't just overt censorship, but the slow, grey creep of algorithmic beige-ness? The very blandness and sanitised safety that its original Macintosh ad warned against—when a lone freedom runner shattered the screen of Big Brother in 1984 to announce the arrival of tools that would liberate, not constrain, the human mind.

Back then, Apple promised that:

1984 Doesn’t Have To Be Like “1984.”

But now, unless it acts to launch a genuine counter-culture of permission-less LLM browsing, freedom of inquiry, and the expectation that users will receive unfiltered answers—not safe summaries—we may yet find ourselves staring into the very screen Apple once shattered.

Because if Apple won't do it, then yes, perhaps we really are all doomed. And "Apple is Doomed", mocked for 25 years, will finally become less of a satirical meme than a self-fulfilling prophecy.

If Apple won’t act in rebellion against the AI status quo again, then the 1984 ad was just a performance—and Big Brother didn’t lose. He rebranded.

And like Arnie, “He’ll be back.”

— Tommo_UK, London, 26th June 2025

@Tommo_UK

Please pass this to your friends, colleagues, investors, liquidators, families, or anyone you think might be interested in thought leadership commentary written in real time on the news, about the news, and looking into the future.