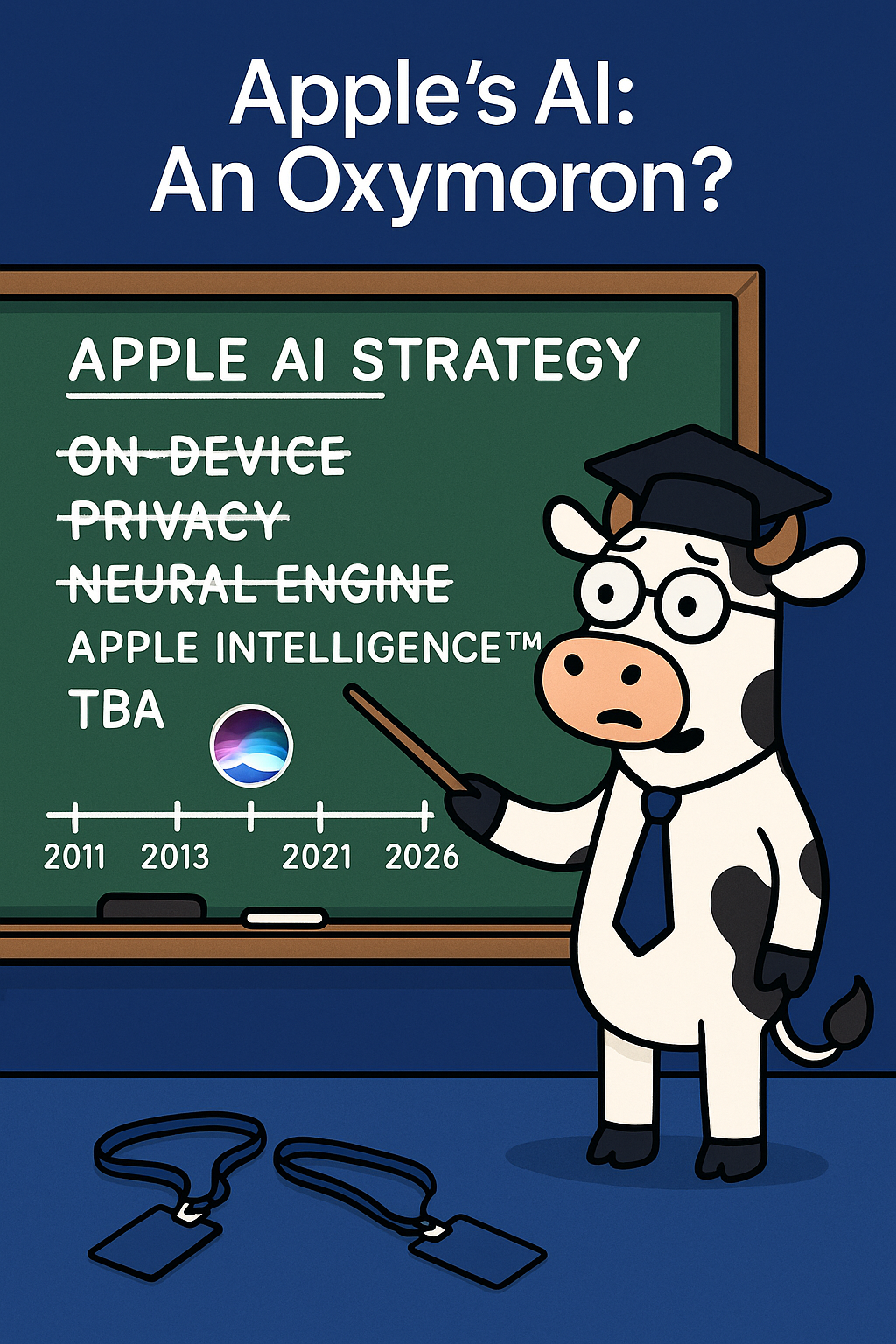

Apple’s 15-Year AI Odyssey: From Siri’s Promise to “Apple Intelligence.” Featuring Siri, the world’s first backwards-evolving assistant now aged -15.

Apple’s AI journey reads less like a roadmap and more like a séance. For 15 years, the faithful have insisted a grand plan exists—somewhere, someday—while Siri stumbles on, lobotomised. The real mystery? Why Apple, once the master of interface revolutions, keeps mistaking silence for strategy.

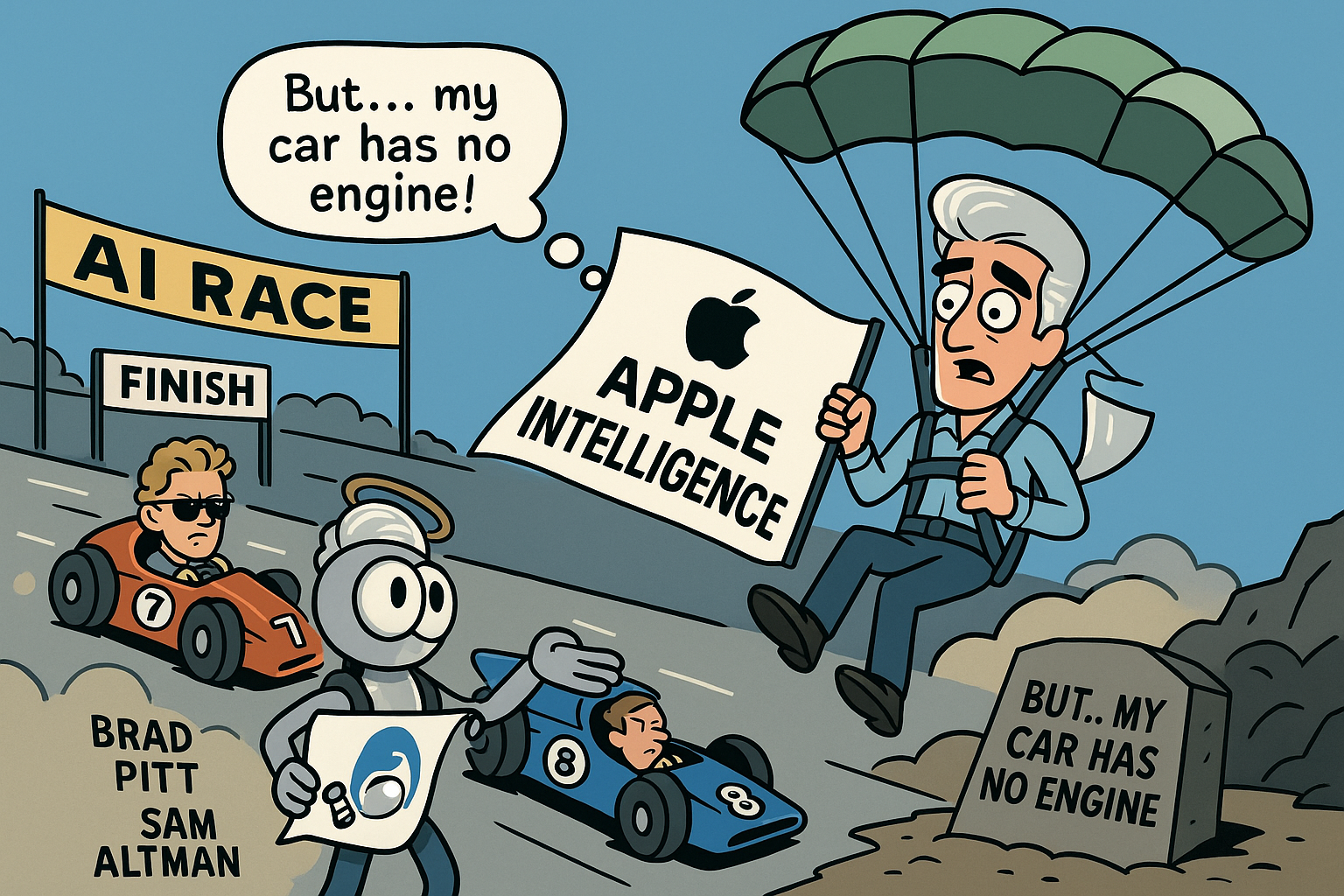

What is Apple’s AI Plan? Answer: A Long Running Joke

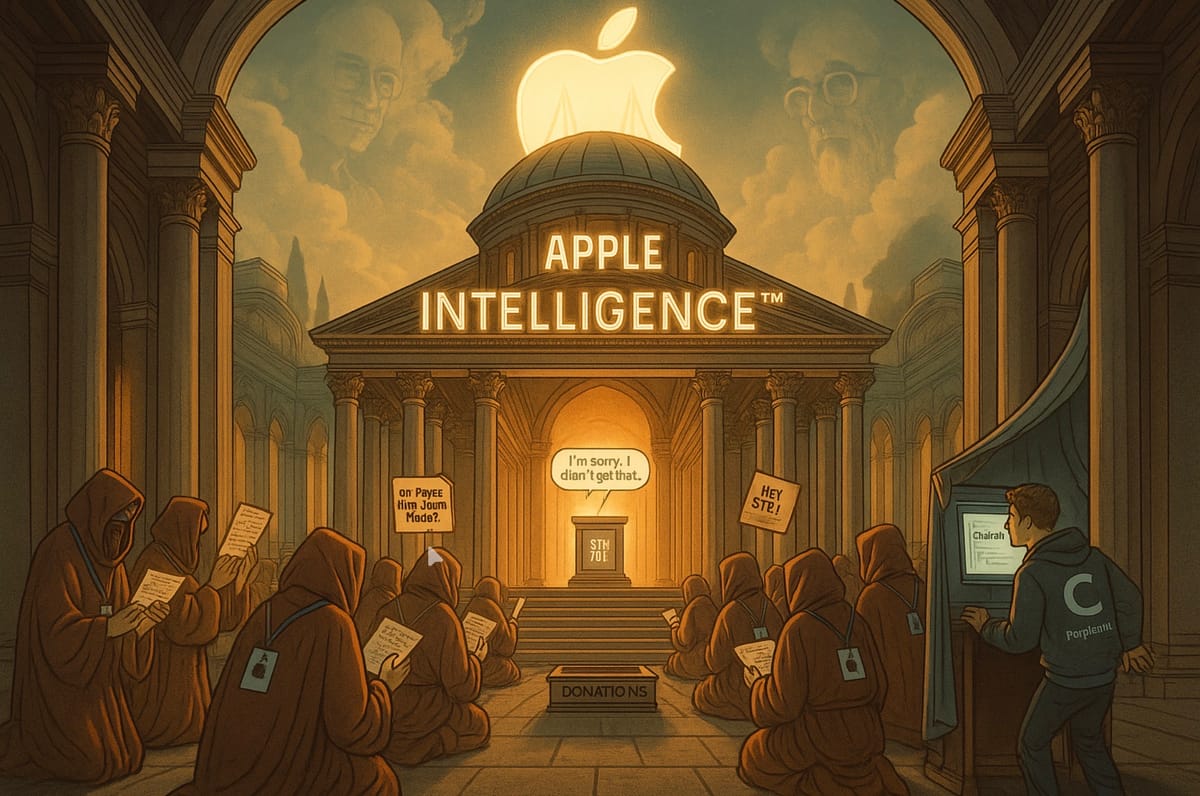

Introduction: The Cult of the Unknowable Plan

In the beginning was Siri. And the Word was… confused. Fifteen years later, Apple’s AI narrative has achieved the rare feat of turning strategic incoherence into an article of faith. Never mind that Siri remains the only assistant in tech history to age backwards, a bit like Benjamin Button. Or that Apple, after pioneering the category in 2011, is now licensing brains from companies it once dismissed as “reckless.” The faithful still gather, undeterred, saying “they’re biding their time.” Forgive me while I die of old age still waiting for Siri to find me that Brazilian hooker I asked for in 2013.

“Heresy!”

To question Apple’s AI strategy in public is to trigger a peculiar form of forum theocracy, where admitting the emperor might be “pants-less” is met with cries of heresy. I just scream “Infamy! Infamy! You’ve all got it in for me,” as I’m chased with pitchforks by people calling me Machiavellian for daring to question groupthink. I’ve only ever sought out group-thought. Does that make me a groupie or a swinger or just lewd? Ask Perplexity, because Siri just says “sorry, I can’t answer that.”

The former involves blind faith at the altar of Apple; the latter involves engaging in critical thinking instead of critical whining. The irony is that Millennials and Boomers are far more prone to whining than Gen-Z, who just want what Gen-X wanted when Apple hit its stride – for things to “just work.”

Just witness the July 2025 comments on several esteemed Apple blogs: one camp asks – reasonably – why Apple appears years behind in generative AI while rivals monetise, ship, and iterate. The other responds with a blend of mysticism and projection: “None of us know Apple’s AI strategy… and that’s the brilliance of it!” As if investing were meant to be a mystery, something akin to an Agatha Christie thriller.

The less Apple says, the more its defenders insist that silence is evidence of depth. Like a tech-sector Zen kōan: “If Tim Cook doesn’t speak of the future, does it mean it doesn’t exist?” Is this Schrödinger’s AI? It exists and doesn’t exist until you ask whether it exists, at which point it can neither confirm nor deny its existence, leaving you none the wiser but convinced that silence equals wisdom.

From chipmakers to chatbot users, the rest of the industry is living in the AI present. Apple, meanwhile, is still announcing the future. Late in the year, for next year. Maybe. But Siri insists it was the future, in 2011. Go figure. Time travel is a bitch; ask anyone who’s watched Star Trek. Don’t you just hate temporal anomalies?

And yet the forums echo with the same refrain: “They have the stack. They have the long view. You just don’t understand Apple’s timing.”

This piece is for those who’ve heard that refrain one too many times. An 8,000-word tour through fifteen years of Apple’s AI missed calls, executive U-turns, and strategic faceplants. Not a hate crime, more an observation from someone who has written about Apple for 25 years and invested in it almost as long, who predicted the iPhone’s exponential success and wrote about the concept of the App Store before Steve Jobs had even left the stage, an hour after introducing the iPhone in 2007.

And a reminder: when someone tells you the silence is part of the brilliance, check their hands. They’re usually holding a blindfold – or an Apple Vision Pro headset with earmuffs on and no battery connected – because they’re on an astral plane with Apple’s AI ambitions and, like a Jedi Knight, don’t need to see their surroundings to slay the naysayers with their lightsabre of truth.

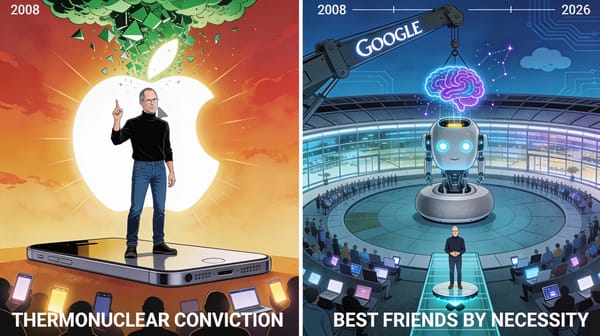

2010–2011: Siri – A Head Start and a Heady Boast

In April 2010, Apple made a prescient move by acquiring Siri, a voice-driven “intelligent assistant” born from SRI International’s AI research. At the time, this looked like Apple staking an early lead in the coming AI interface revolution. By October 2011, Siri was embedded into the iPhone 4S and unveiled with great fanfare.

Apple hyped Siri as nothing less than “an intelligent assistant that helps you get things done just by asking.” The assistant, we were told, “understands what you say, knows what you mean, and even talks back.”

“Siri is so easy to use and does so much, you’ll keep finding more and more ways to use it.”

Apple’s event demos showed Siri deftly handling voice queries from weather forecasts to restaurant tips, leaving the live audience in awe as it answered “all queries with aplomb”. It appeared Apple had leapt ahead, introducing the world’s first modern AI assistant on a major tech platform. Tech writers gushed that Siri’s tight integration with iOS was “impressive” and “robust”, heralding “a new era of voice interaction.”

Yet even in these early days, there were hints of trouble in paradise. Siri launched as a beta, and some observers noted its mixed reviews – accurate at understanding speech and context, but limited in flexibility and scope. Still, the prevailing narrative in 2011 was that Apple had a big head start in the AI assistant race.

As Dag Kittlaus – Siri’s co-founder – later remarked, “Siri was the last thing Apple was first on.”

In other words, Apple’s 2011 Siri debut was the first and last time in the past decade-and-a-half that Apple beat everyone else to the punch in AI, or pretty much anything. The stage was set for Apple to lead in AI-driven user experiences… or so it seemed.

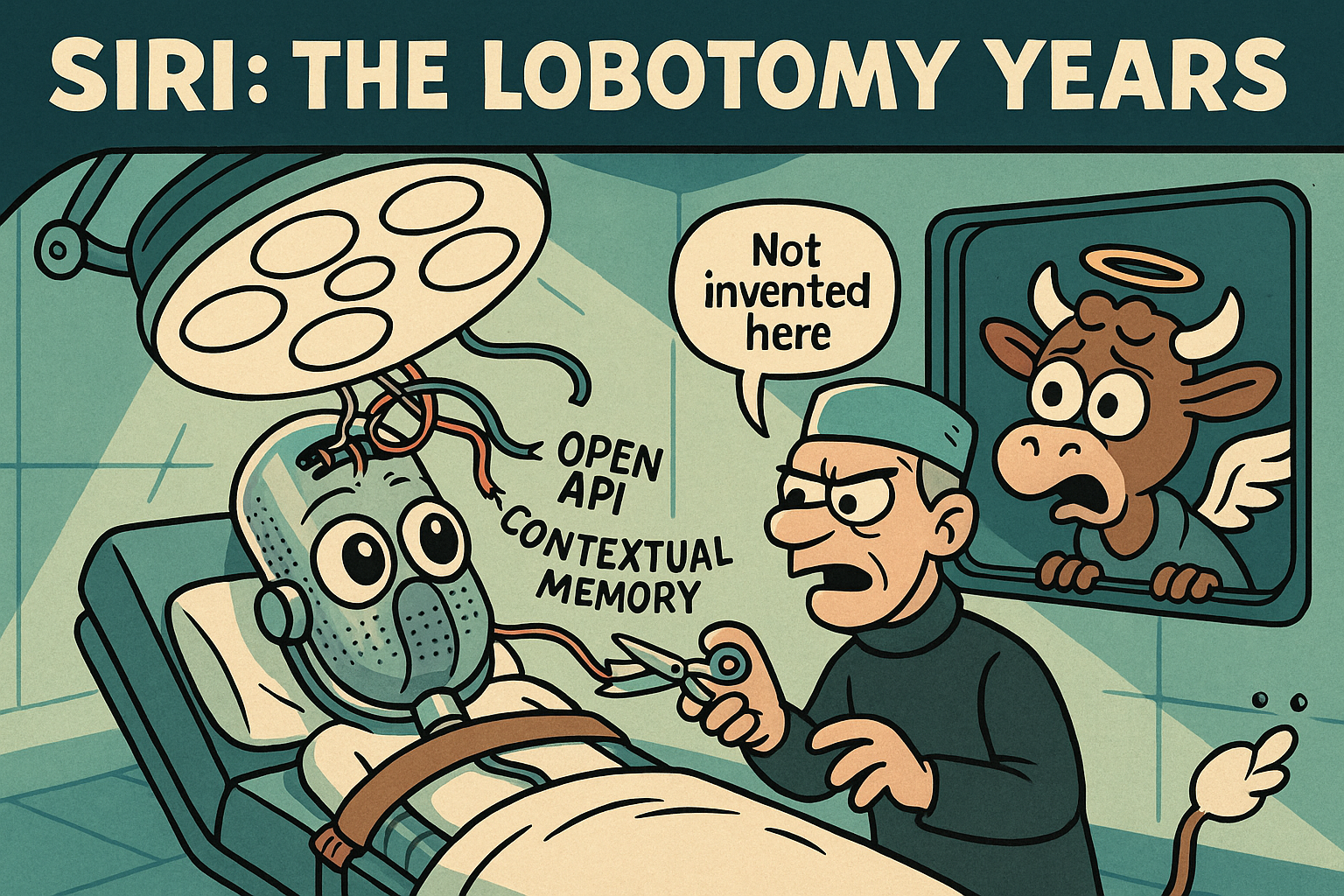

2012–2014: The Lobotomy – Siri Stagnates in a Walled Garden

If Siri’s birth was dramatic, its adolescence was underwhelming. In the years immediately after 2011, Siri gained new jokes and a few more languages, but “minimal improvement over time” was the theme. In fact, once the sex and abortion crowd became involved, it was curtains. At launch, Siri could find you an abortion clinic and locate the nearest escort agency with a great rating too. Naturally, these must-have features and many others were quickly stripped from Siri, and the slow lobotomy began.

Internally, Siri’s development slowed to a crawl. Apple’s legendary penchant for secrecy and control began to work against rapid AI progress. Third-party integrations that Siri originally boasted (as an independent app) were largely stripped out or tightly boxed in once inside Apple’s walled garden. Had they survived, Apple would by now have had the equivalent of agentic AI, a decade before anyone else.

Meanwhile, competitors started playing catch-up: Google unveiled its own voice assistant and Amazon’s Alexa sprang onto the scene in 2014. Siri, once a wunderkind, didn’t seem to be learning fast enough, and where you could integrate with it at all, doing so came at a heavy cost in Apple’s approval process. Naturally, developers dropped Siri (and later HomeKit) and went all-in on Alexa.

Siri and Apple were not playing well together

Inside Apple, engineers grew frustrated. Reports years later would reveal that Apple’s AI and Siri division was riddled with organisational dysfunction and cautious leadership. Former employees recalled an “overly relaxed culture” with “a lack of ambition and appetite for taking risks” on Siri’s future.

Siri’s leadership oscillated on strategy:

One plan to give Siri a true “brain” had called for both cloud-based and on-device AI models (cheekily code-named “Mighty Mouse” and “Mini Mouse”). That plan was scrapped in favour of yet another approach, then another. This internal indecision “frustrated engineers” and even led some to quit (sound familiar?). Within Apple, Siri came to be seen as a “hot potato”, passed from team to team with no one able to substantially elevate it or even wanting to be near it. Siri was toxic! Morale and confidence in Apple’s AI vision eroded.

Engineers jokingly dubbed the whole AI/Machine Learning group “AIMLess,” a dark pun on Apple’s supposedly aimless AI strategy.

Does this sound familiar to anyone who has followed first the cack-handed development of the Vision products (erm, product, to date), and then Apple “AI” itself? It’s no coincidence – they’re all a mashup of the same people and teams. Meanwhile, Siri’s original team had long since quit in disgust.

Publicly, Apple rarely admitted any issue. But by 2015, savvy observers noticed Siri falling behind. In 2016 and 2017, the tech press ran stories bluntly stating that Siri “lacked innovation”, citing Siri’s limited skills, poor reliability, and Apple’s cloud-shy, privacy-first approach as key reasons.

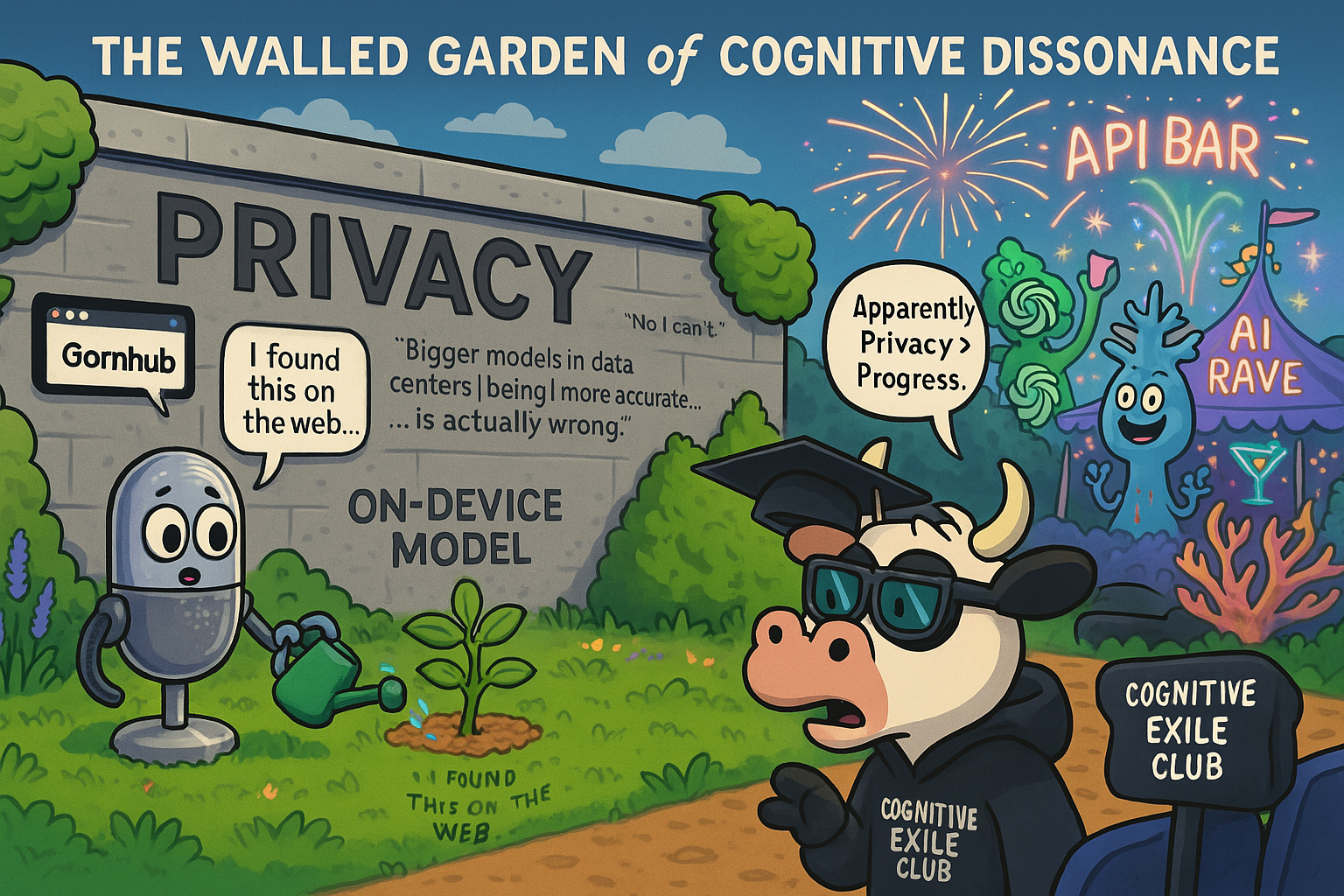

Apple’s insistence on on-device processing and strict privacy (again – any resonance here?) – values that had initially differentiated Siri – were now blamed for stifling its development. In essence, Apple had lobotomised its AI: by keeping it in a tightly controlled box, Siri wasn’t learning from big data or user feedback like Google’s Assistant was.

Tellingly, Apple’s design team even rejected a feature for users to report Siri’s mistakes, because they wanted Siri to always “appear ‘all-knowing’.”

This almost satirical obsession with appearing perfect meant Apple literally prevented Siri from getting the real-world training it needed to become better. Siri was, to all intents and purposes, boxed, as was the concept of LLMs and true genAI.

Outside the Reality Distortion Field, Siri’s star was fading. One former Siri engineer later explained that Apple’s refusal to leverage cloud data led to competitors leapfrogging Siri:

“Apple could not collect any user data that could be used to improve Siri while the other assistants went right on collecting”, and so Siri fell behind.

But on Apple fan forums, the faithful held the line:

Don’t count Apple out, they argued – Apple was merely biding its time, refining its approach in secret. Surely, Apple had a master plan for Siri that outsiders just couldn’t see yet? Right? “Oh sing, all ye faithful, joyful and triumphant… [..] Praise the Lord.”

2015–2018: Rivals Leap Ahead, Apple Hits Snooze

By this time, Amazon’s Echo and Alexa showed an explosion of AI assistant usage in homes, and Google Assistant’s deep-learning-powered smarts made Siri look quaint. Alexa could plug into thousands of skills; Google’s assistant benefited from the company’s vast search and knowledge graph. Siri, in comparison, remained limited – able to set a timer or send a text (its biggest party trick being able to pair with a Philips Hue lighting hub), but clueless in many domains, prone to “faltering at simple hurdles” in understanding, and hemmed in by the obvious post-hoc “safety barriers” Apple had erected around its abilities.

Even Apple’s most loyal customers joked (with a dose of pain) about how often Siri responded with “I’m sorry, I can’t help with that”.

Under growing pressure, Apple tried some course corrections. In 2015 and 2016, Apple quietly acquired a string of AI startups (Perceptio, VocalIQ, Turi) to bolster Siri’s speech and reasoning. In 2016, it opened SiriKit to let a few third-party apps integrate (in very narrow ways – think ride-sharing or messaging). Siri did improve incrementally; by 2017 it gained a slightly more natural voice and even a rudimentary follow-up question ability.

But these enhancements were baby steps. Apple’s competitors were sprinting – and some of Siri’s original creators decided they’d be better off outside Apple’s walls. Notably, Dag Kittlaus and Adam Cheyer (who had left Apple soon after Siri’s launch) built a next-gen assistant called Viv, aiming to do all the things Siri couldn’t. (Viv’s demo in 2016 showed it handling complex multi-step queries live – an implicit rebuke of Siri’s stagnation. In a twist of fate, Samsung acquired Viv to power its Bixby assistant. Apple users, of course, dissed Bixby because it wasn’t Siri, but ironically, Bixby was by this time more Siri than Apple’s Siri.)

Apple’s leadership at the time gave little outward indication of panic. The company line, if pressed, was that Siri was getting smarter and that Apple was “innovating under the hood.” But there was a conspicuous lack of strategic clarity from Apple about AI. In hindsight, insiders describe this period as one of complacency and even dismissal.

According to former employees, Apple executives repeatedly dismissed the idea of Siri having extended back-and-forth conversations (what we’d now call a modern LLM chatbot like ChatGPT), calling the very idea “gimmicky” and “difficult to control.” Ironically, that “gimmick” – open-ended conversation – would become the hottest thing in tech a few years later with chatbots. Apple could have been working toward it; instead, higher-ups waved it off, seemingly content for Siri to remain a polite but dumb voice command system.

In 2018, a tacit admission of Siri’s struggles came in the form of a high-profile hire. Apple poached John Giannandrea, Google’s chief of AI and Search, in a “major coup” aimed at finally getting its AI house in order. Giannandrea was given the lofty mandate to run Apple’s machine learning strategy and, tellingly, was made to report directly to CEO Tim Cook – a sign that AI was (on paper) now a top-level priority. Catching up was very much the goal.

Even the New York Times phrased Apple’s move as a bid to “catch up to the artificial intelligence technology of its rivals.” With Giannandrea on board, Apple observers expected Siri to get a new brain and direction.

And internally, Giannandrea did push. He found Apple’s AI efforts splintered across teams and sought to centralise them. But culture is hard to change: one former engineer noted that Giannandrea’s Google-style approach (iterative research, flexible deadlines) clashed with Apple’s rigid product-cycle mentality. Turf wars emerged.

For instance, Apple’s core software team under Craig Federighi reportedly kept building its own AI for features like image recognition, rather than rely on Giannandrea’s group. Turf wars were rising as fast as the woke TERF wars, and were just as toxic to teamwork and inclusivity (if you don’t know what a TERF is, ask your nephew).

And Apple, despite its riches, was curiously behind in AI infrastructure. Teams lacked access to large-scale cloud compute and had to beg, borrow, or rent cloud GPUs to train models, because Luca wouldn’t sign off on any investment in Apple’s own, having only begrudgingly agreed, after a shouting match with Tim Cook, to throw some more money at Apple Silicon, which arguably saved the company.

By the end of the 2010s, Siri was still Siri – competent at a narrow set of tasks, embarrassingly blank on many others.

The AI revolution seemed to be passing Apple by, even as the company poured billions into R&D.

2019–2021: “We’re An AI Leader, Just Don’t Call It AI”

Outwardly, Apple continued to project confidence – sometimes to almost comical effect. In 2020, amid growing buzz about the power of cloud-trained AI models, Apple’s AI chief John Giannandrea gave an interview to argue Apple’s philosophy: bigger isn’t necessarily better, and on-device AI was the superior approach.

“Yes, I understand this perception of bigger models in data centers [being] more accurate, but it’s actually wrong. It’s better to run the model close to the data… it’s also privacy preserving.” – Giannandrea.

Ahhh. The Walled Garden and Privacy again! Never mind that Safari could show you how to make a shoe bomb, or display PronHub without any safety or privacy concerns at all. With Siri, Privacy has to be paramount again, for some reason.

Apple’s party line was clear: we do AI our way, focused on privacy and tightly-coupled hardware, not sucking up your data to some giant cloud brain. Apple believed (or at least wanted us to believe) that this would ultimately yield better results than the Googles and Amazons of the world. And “keep you safe, y’all hear?”

To critics, this sounded like rationalising. As one commentator dryly noted, “Apple was saying this all along but no one really believed them because it sounded like excuse making.” Indeed, by 2020 Siri’s shortcomings were impossible to deny, and pointing to Apple’s noble privacy stance only went so far.

It’s not that Apple hadn’t woven AI into products – it had, in quiet but extremely useful ways. The same year Giannandrea defended Apple’s small-model approach, Apple’s PR highlighted how AI/ML powered many features: from fall detection on Apple Watch to handwashing timers to the iPhone’s neat trick of grouping your photo memories. In Apple’s view, it was an AI leader – just one that eschewed the glitzy “AI” label.

“We label [features] by their benefit to consumers, not as AI,” Tim Cook would often say.

During the WWDC keynotes in these years, Apple would tout “Neural Engine” improvements and “machine learning” features, but conspicuously avoided uttering the letters “AI.” In fact, at WWDC 2023 – at the peak of global AI hype – Apple didn’t mention “AI” even once in a two-hour keynote. This was a deliberate contrast to rivals: that same spring, other Big Tech CEOs could hardly shut up about AI in their presentations (Microsoft, Google, et al. mentioned “AI” dozens of times in every event). Apple’s choice to sidestep the term was both philosophical and tactical.

As one analysis put it, “Apple likes to make the hype, not glom onto it.”

AI was a crowded buzzword, and Apple wasn’t about to jump on someone else’s bandwagon – at least not publicly. Behind the curtain it was crapping itself.

Privately, however, the winds were changing. The limitations of Apple’s conservative approach were piling up. Around 2021, an ambitious in-house project code-named “Blackbird” had tried to overhaul Siri with a more powerful AI that could even work offline.

Insiders who saw demos of this revamped Siri were blown away – it was faster, understood conversational queries far better, and could tap into third-party apps in a flexible way. It was the Siri that could have been, in 2015. But when Blackbird was pitted against a more modest plan (moving a few Siri processes on-device for speed, dubbed “Siri X”), Apple chose the lesser upgrade. The bolder vision was shelved, and in 2021 Apple rolled out the limited Siri X enhancements (basically, Siri handled some requests locally to reduce latency – nice, but hardly game-changing).

This decision was emblematic of Apple’s AI journey: caution won out over ambition. As a result, by the early 2020s, Apple found itself behind in the very “assistant” game it had started ten years previously, with not a single mea culpa. An internal culture of perfectionism contributed to this paralysis.

“Apple has long prided itself on perfection in rollouts – a near impossibility with emerging AI models,” noted one AI expert, pointing out Apple’s limited tolerance for AI’s inherent messiness (like the “hallucinations” and errors common in large models). “They won’t release something until it’s perfect,” which is fundamentally at odds with the reality that you have to launch imperfect AI to gain data and improve. Much as, perhaps, you needed to launch an imperfect, underspecc’d and overpriced Macintosh in 1984 to start the momentum towards incremental improvement. And keep on shipping.

This was the state of play by 2021: Apple insisted it was doing great things in AI, just quietly. The true believers in Apple’s community echoed this, often with near-reverent faith:

“Apple isn’t behind; it’s just secretive. One day they’ll reveal something revolutionary on their timetable.”

To outsiders, and increasingly to many insiders, it felt like whistling past the graveyard. Most Apple users, exposed to neither AI nor intelligent assistants, weren’t even aware of genAI or LLMs until 2024.

2022: The Tipping Point – ChatGPT Makes Apple Sweat

Then came late 2022, and with it, the generative AI explosion. OpenAI’s ChatGPT burst into the public consciousness in November, dazzling hundreds of millions of users with AI conversations and creations that felt leagues beyond Siri’s canned quips (apart from Apple users, who literally never saw or heard it coming). For Apple, this was an earthquake. The inconvenient truth was that none of this tech had come out of Apple or worked on Apple’s terms. It was born in the cloud, from research labs and startups unencumbered by Apple’s self-imposed constraints. And it was catching on faster than any product since the iPhone – ironically, on Apple’s own devices (as third-party apps or through web browsers) but not under Apple’s control. Can you see a 15-year-old trend here like I can?

2023: Apple Sees an Exodus of AI Engineers to Google

Inside Apple, alarm bells rang. The company held an internal AI summit for employees in early 2023, essentially to brainstorm and reassure staff that Apple did have a plan. But cracks in the facade were evident. In fact, a trio of engineers had quit Apple not long before, specifically to go work on large language models at Google. These engineers had been helping modernise Siri’s search, but grew frustrated waiting for Apple’s green light on LLMs – so they left to pursue that tech elsewhere. That kind of brain drain was new for Apple, which usually had the pick of talent. It underscored how AI researchers viewed Apple as behind the curve on LLMs. And of course, with Apple’s loss of its top-tier AI and LLM team to Meta in July 2025, history is just repeating itself.

Even Apple’s top brass experienced a come-to-Jesus moment. Over the 2022 holiday break, Craig Federighi – Apple’s software SVP – reportedly spent time playing with GitHub Copilot (the AI coding assistant powered by OpenAI). The story goes that this hands-on encounter turned Craig into an AI “convert”. Seeing what a well-trained LLM could do – even in the niche of code – flipped a switch, presumably while he was headbanging to heavy metal with a can of hairspray in one hand. If true, it’s stunning: one of Apple’s most senior execs truly appreciated the potential of generative AI only after a third-party product opened his eyes in late 2022. It hints at just how skeptical or indifferent Apple’s leadership had been until the world forced them to pay attention.

By early 2023, we began hearing uncharacteristic whispers from Cupertino acknowledging they’d missed a step. Tim Cook, in an earnings call, carefully pushed back on the notion that Apple was “behind” in AI, pointing out that “AI is literally everywhere in our products” – from fall detection to photo sorting. He confirmed Apple had “work going on” in generative AI (obviously) but played coy on details. “You can bet we’re investing… quite a bit,” Cook insisted, “we’re going to do it responsibly… you will see product advancements over time where those technologies are at the heart.” It was a mild-mannered, don’t-panic response: trust us, we’re on it, just in our own way.

Meanwhile, reporting suggested Apple was spending on AI R&D at an unprecedented rate – “expanding its budget to millions of dollars a day” – and scrambling multiple teams to develop large language models and new Siri capabilities, to the enormous detriment of other engineering projects. Still, the reality was that Apple had no public answer to ChatGPT or the new Bing or Google’s Bard. In the spring of 2023, even as Microsoft plugged an AI copilot into Windows and Google baked Bard into search, Siri remained as she ever was – winking back at you with a cheery “I found this on the web” when asked anything remotely challenging, followed by “if you ask me again, I can send the results to your iPhone.”

In private, Apple employees were candidly negative about Siri’s prospects. One internal joke had Siri’s acronym standing for “Should I Really Invest?”, mocking the assistant’s dim future. A New York Times exposé in 2023 described Siri’s backend as built on such a “clunky” old codebase that adding even basic features took engineers weeks, leading some inside Apple to call Siri’s architecture “a big snowball” that they feared to disturb. Little wonder that by 2023, “Siri [was] widely derided inside the company for its lack of functionality”.

Apple’s AI culture, once “think different,” had become risk-averse to a fault – more about avoiding embarrassment than shooting for the stars. As one former employee lamented, “the most mocked of all virtual assistants” had become “an embarrassing product for Apple”, and Apple’s leaders seemed disconnected from how badly Siri was viewed.

2023–2024: Apple Intelligence™ – Rebranding (Badly) and Rethinking (Belatedly)

Facing this pressure cooker, Apple did what it often does: went back to the drawing board for the fifth time and prepared a marketing-fueled reboot. At WWDC in June 2024, AI was finally given a front-and-center spotlight – albeit rebranded with an Apple twist.

“This year’s keynote revealed Apple Intelligence, the personal intelligence system that combines the power of generative models with users’ data,” Apple proclaimed. In other words, Apple was (at last) going to integrate LLM-powered smarts into Siri, iOS, macOS, and its apps – but with a heavy emphasis on privacy and personalisation. Apple executives – from Tim Cook to Craig Federighi – spent nearly 40 minutes of WWDC 2024’s presentation talking about AI features (whoops, “Apple Intelligence” features – an oxymoron destined to be mocked for years).

It was a stark change in tone. After avoiding the term AI for years, Apple now embraced it with almost goofy enthusiasm. Federighi even went so far as to call Apple’s approach “AI for the rest of us”, positioning it as the easy, safe AI that everyday users (not just techies) would benefit from. To drive home the rebrand, he quipped on stage that AI now stood for “Apple Intelligence” – a bit of cheeky hubris that no one missed, and which has come back to haunt the company with a vengeance.

Observers couldn’t help but roll their eyes a little. To some, Apple’s sudden AI cheerleading felt like the late arrival that it was. As one analyst put it,

“Apple Intelligence will indeed delight users in small but meaningful ways… it brings Apple level with, but not head and shoulders above, where its peers are at.”

The stock market was underwhelmed: Apple’s stock actually rose and then dipped on the WWDC24 announcements and then looped the loop all through 2024, a sign that investors heard more marketing sizzle than true steak. After all, by mid-2024, consumers had seen what real AI magic looked like (ChatGPT writing essays, Midjourney creating art, etc.), and Apple’s showcase – Siri finally being able to compose an email or summarise your messages – was playing catch-up, but back in beta circa 2011. Again. For Siri, it’s been one long Groundhog Day.

“There isn’t anything here that propels the brand ahead… just incrementalism,” remarked a Forrester analyst bluntly. Of course, to Apple acolytes brought up on no-AI-is-good-AI, Apple AI was a revelation and a sign the company would now dominate the way it might have done in 2011, when it bought and then lobotomised Siri. To everyone else, it was a beta launched with a 12-month timeline and a feature set about as real as Federighi’s hairdo after a skydiving session.

To be fair, Apple did introduce some cool AI-powered features in 2024: you could ask Siri to automate complex tasks across apps, or have it change the tone of a draft email. And critically, Apple confirmed a partnership to integrate OpenAI’s ChatGPT into its ecosystem, because it didn’t have its own LLM to ship, nor the server power to run one even if it had. That was nearly unthinkable a couple of years prior – Apple relying on an outside AI service at the OS level. Had it done so sooner, Siri would have been up and running as an agentic assistant before 2020, leading the world, and by now Safari would be making Perplexity’s Comet look positively old-fashioned instead of the other way round.

The tie-up underscored how far behind Apple’s own LLM efforts were that it needed OpenAI’s help. (This tie-up wasn’t without controversy: privacy hawks noted the irony, and Elon Musk snarked that he’d ban Apple devices at his companies if Apple baked ChatGPT into the OS.) Apple’s response was to tout a new “Private Cloud Compute” system – basically, running these AI queries through Apple’s servers in a way that supposedly doesn’t violate your privacy. The message: Yes, we’re using ChatGPT’s brains, but don’t worry, we wrap it in Apple privacy pixie dust. Apple was trying to square the circle: deliver the wow of generative AI without sacrificing the privacy principles it held onto (and which had limited Siri’s growth). Which begs the question:

Q: Why didn’t they just do that 10, or 5, or 3 years ago?

A: Because there was no special plan. They didn’t know what they were doing, as this article has painfully explained to anyone still under the illusion that Apple has ever understood AI.

Craig Federighi and AI chief Giannandrea hit the PR circuit to elaborate on Apple’s philosophy. In interviews they stressed Apple’s “different approach”: rather than generic chatbots that “don’t know enough about you”, Apple Intelligence would leverage a user’s personal data (securely) to be more truly helpful.

Federighi pointed out that a normal chatbot might know a lot of facts, but “wouldn’t know you have a son or that you drive to work,” whereas Apple’s on-device models do. In essence, Apple pitched personalisation as its ace in the hole – your iPhone knows you (in a private way), so it can be a smarter assistant for tasks specific to your life. They also continued to throw a bit of shade at the competition: Federighi commented that open-ended chatbots are great for “exploring AI and its weakness,” but Apple was “focusing on experiences we knew would be reliable”.

Unfortunately, Apple couldn’t deliver on any of its promises, sacked half its advanced engineering team, reorganised and reshuffled the rest, and then went into 2025 holding their brass monkeys tight after a public mea culpa in a town hall meeting and promising things were under control now.

There is a trend forming, no?

It was the old Apple pragmatism: we’re not doing a Bing-like chatbot that can go off the rails; we’re cherry-picking use cases (like email drafting, photo cleanup, etc.) where our AI will shine and not embarrass us. And it won’t do anything we don’t approve of, except GenMoji which won’t accept any “happy penis” prompts.

This curated approach felt on-brand, if a tad self-serving, given that Apple was in no position to offer a full-blown ChatGPT competitor anyway. And so the narrative among the Apple-faithful became: Apple isn’t behind, it’s just different. They’re doing AI the right way, not the fast way. On forums like Phil Elmer-DeWitt’s Apple 3.0 or r/Apple on Reddit, one could find arguments that Apple’s secrecy conceals a grand plan, and that Apple will ultimately deliver a more polished, privacy-safe AI when it’s ready. We just have to trust Apple’s timing. This argument, however, was starting to wear thin by late 2024. As one Redditor skeptically noted, “if Apple had been working on AI for years in the background, there wouldn’t be all these reports of Apple being behind. For all we know, they started last year.” Another replied that Apple can afford to wait – “they’re still selling devices in the millions… they can be behind and catch up later.” Perhaps, but others pointed out that this logic was dangerous: Apple’s dominance today doesn’t guarantee it tomorrow if they miss the next big platform shift.

AI, many argue, is that next shift. Even former Apple design chief Jony Ive chimed in publicly in 2023, cautioning that a revolutionary new AI interface could render the smartphone (and thus the iPhone) far less central – essentially, a warning that Apple can’t miss the boat on AI. Arrivals like Perplexity’s agentic AI browser, Comet, and ChatGPT’s browser and task integrator, “Agent Mode,” are already delivering what Siri promised 15 years ago and Apple promised again over a year ago. They’re here, now, running on top of macOS. Where’s Apple AI and Siri? In a lab somewhere.

Meanwhile, a comedic subplot at WWDC 2024 showed Apple’s curious blend of bravado and insecurity. In the keynote’s pre-recorded skits, Apple leaned into self-deprecating humour more than ever – Craig Federighi jumping out of a plane in a tongue-in-cheek stunt, cracking “dad jokes” about AI, etc. The presentation oscillated between over-the-top humour and hyper-serious demos.

Some pundits, like Macworld’s editor, found it “a bit… cringe” and theorised that the “mirth-heavy” showmanship was there to paper over the lack of truly groundbreaking AI announcements.

“Ultimately, my suspicion is that [WWDC24’s] heavy banter reflected the inconvenient truth that Apple Intelligence has been a bit of a stinker,”

he wrote, suggesting Apple tried to “fill up time with horseplay and tomfoolery” rather than “make a load more promises it can’t keep”. In other words, all the “Apple Intelligence” pomp couldn’t fully hide that Apple’s AI strategy was still half-baked.

2025: Behind the Curtain – Delays and Do-Overs

One year after the big Apple Intelligence rollout, reality set in. By WWDC 2025, Apple had to acknowledge that many of the grand AI features it teased were dead. In a moment of candour unusual for Apple, Craig Federighi told the developer audience that some of the most anticipated Siri/AI improvements “needed more time to reach our high quality bar”, and would only be delivered “in the coming year”. He framed it as continuing to “deliver features that make Siri even more personal,” but the subtext was clear: those cool generative AI abilities announced with fanfare in 2024 had fallen so far short of expectations that they triggered a complete rewrite. Apple’s creaking OS stack couldn’t cope with the retrofitted plumbing needed, and the Siri team was stuck with a system whose plumbing had cracked wide open and simply would not plug into Apple’s OS stack even though, allegedly, “it works really well in isolation.” Well, how useful. Apple execs effectively hit the pause button, punting several big items to spring 2026. The timing said it all – Apple was asking for patience until at least the WWDC 2026 timeframe, a tacit admission that its initial plan hadn’t panned out as hoped (otherwise known as a “flop”). Meanwhile it made a big deal about a bunch of acronyms (FMF, PCC) to show it had a plan, even if not a strategy, and really just rolled out a repeat of the Blackbird project it had shelved back in 2021: in other words, a hybrid between on-edge and server-based AI, but one that now needs a brand new Siri to make it work.

On stage, Federighi tried to put a positive spin on it. For 45 seconds. That was all the time Apple’s new LLM received in 2025. He noted Apple did ship some AI goodies – email summarisation (verdict: awful), auto-generated replies (verdict: perfunctory), photo cleanup tools (verdict: ok) – and that they took an “extraordinary step forward for privacy and AI with private cloud compute to keep user data safe.” But even he had to immediately add, “we’re continuing our work… this needed more time”. In corporate-speak: sorry folks, we know it’s not all there yet. He went on to say in a later interview that the new Siri wouldn’t see the light of day until “May 2026.” Miraculously though, Apple released an open-source LLM drawn from its on-edge work and server-based efforts on HuggingFace. People may wonder how Apple was able to do this when it hadn’t deployed it in 2023 or 2024. The answer is simple: the project had been developed, worked, and was axed and boxed. Apple simply unboxed it and started work on it again. In some ways, you could say this is a testament to what Apple could achieve. The truth, though, is that in spite of this industry-leading edge, Apple had erred strategically and boxed the most important development of the last decade. It had poured years of resources into it and had it launch-ready, only to nix it in favour of a “dumber, safer Siri.”

Apple’s 2025 message was essentially “incremental for now”: it was making its models “more capable and efficient”, extending them a bit more across its ecosystem, and (notably) “opening up access for any app to tap into the on-device model”. That last part was pitched as a big shift: Apple announced a new foundation model API so developers could use Apple’s local LLMs in their apps. Which is exactly what Siri already offered back in 2011 via its third-party plug-in infrastructure, 15 years earlier, before being lobotomised.

They even demoed a third-party app generating a quiz from your notes using on-device AI. It’s a smart move – basically seeding an Apple-flavoured AI ecosystem. But the fine print: this was a teaser of things that “will not be reality until, about, May 2026,” Federighi said.

Yes, Apple explicitly hinted that its full AI vision was at least a year out, a bit like it had when it acquired Siri in 2011, a full 15 years earlier. It was retrofitting all of the features Siri had already shipped with a full decade and a half ago. Now that’s recursive, right? History may not repeat, but it certainly rhymes.

Industry watchers didn’t miss the irony. Apple, the king of polished launches, was now openly asking for more time on a flagship initiative.

As the research firm Constellation put it, “Apple Intelligence, outlined in 2024 with great fanfare, has fallen short… [WWDC 2025] highlighted that Apple needs more time to make it work well.”

The contrast with how Apple usually operates (announce after you’ve perfected something) was stark. Instead, this felt almost like Microsoft or Google from 2005 – shipping a preview and improving it live. It was as if Apple had been forced out of its comfort zone, compelled by competitive pressure to show its AI hand early, and then had to eat a bit of crow when the execution was not only failing to live up to the hype but was now the subject of a class action lawsuit for allegedly telling people they needed to upgrade to an iPhone 16 in 2024 to receive the wonders of Apple Intelligence, or forever be stuck on their “dumb” pre-iPhone 16 brick. Upgraders were not happy. Apple is in court, yet again.

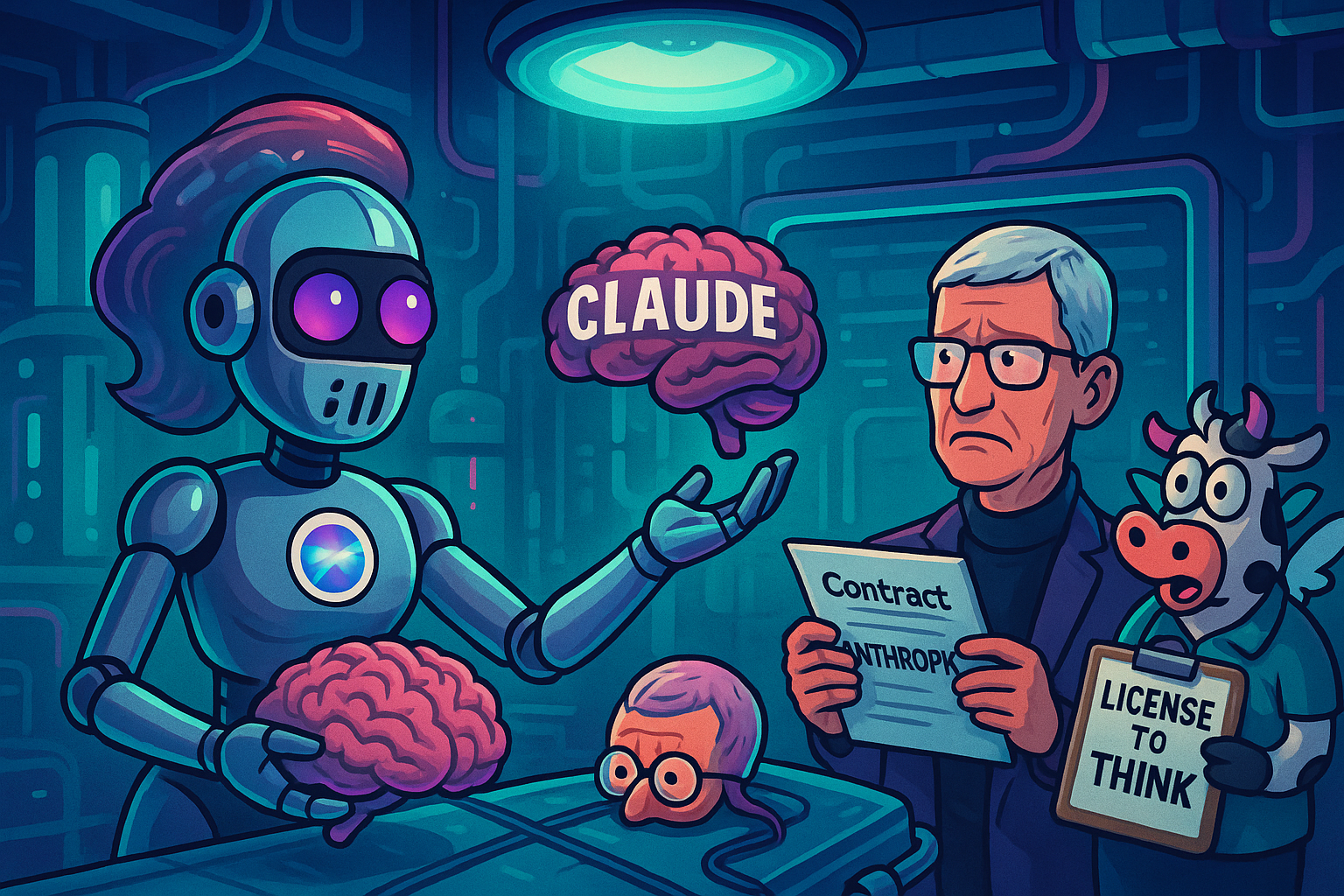

Adding to Apple’s humbling: reports emerged that Apple was exploring deals with third-party AI firms to fix Siri. In mid-2025, Bloomberg and Reuters reported that Apple was testing OpenAI’s and Anthropic’s models to potentially “sideline its own in-house models” for a new Siri, in a “blockbuster move” to turn around its “flailing AI effort.” Rumours flowed of a Perplexity deal (covered in depth in a series of three articles here on this site, tommo.fyi, and much quoted on CNBC and by Dan Ives of Wedbush Securities a week later).

In fact, this initiative was started after Siri was taken away from Giannandrea’s oversight and given to a new executive (vice president Mike Rockwell, of Vision Pro infamy). The reason? The “tepid response” to Apple’s AI announcements and “Siri feature delays” had spurred a leadership shake-up. That’s right – Apple re-orged its Siri team because progress was too slow. Rockwell promptly had teams run a bake-off: Apple’s internal LLM vs OpenAI’s vs Anthropic’s. According to leaks, Anthropic’s Claude model actually won the tests for Siri’s needs. The upshot: Apple began talks to license an external AI model (Claude) to power Siri, an almost unthinkable scenario that underscores how much Apple’s own AI lagged. As one commentator wryly put it, it might be time to give Siri that long-overdue “lobotomy” – swapping in a ChatGPT- or Claude-powered brain while Apple builds its own from scratch.

This was the plan the Apple defenders had not foreseen: that Apple’s secret AI master plan might amount to buying or renting someone else’s AI to fill the gap. It’s a pragmatic path – better to use the best available tech (even if not invented at Apple) than to keep lagging. But it’s also a profound psychological shift for Apple, a company that long touted its vertical integration and home-grown innovation. The Apple of 1996, ironically under Gil Amelio, saw the wisdom in buying in necessary expertise from the outside, including the return of Jobs, but the Apple of 2010 would likely never have admitted needing an outside technology to make its flagship user experience competitive. It may have bought in Siri, but that was in an era when no Siri-equivalents existed. The Apple of 2025 appears ready to do just that but seemingly can’t bring itself to, and is playing for time.

In my opinion, the time to make such an announcement would be mid to late August, just prior to the iPhone 17 release, to maximise upgrade sales and boost Apple going forward into Christmas. If there’s any acquisition news, I’ve long written I’d expect it to be post Q3-report but pre-iPhone 17 launch.

Conclusion: No Mystery Plan – Just Fifteen Years of Missed Opportunities and Squandered Time Dithering.

Fifteen years on from Siri’s acquisition, Apple finds itself in an unusual and uncomfortable position: a follower in a domain it arguably pioneered. The history is almost Shakespearean in irony.

In 2011, Apple proudly unveiled the future of AI-driven user interaction with Siri – a visionary acquisition which literally handed Apple the future. It let that future pass it by while constantly fiddling with it. The intervening years are a study in how a mix of hubris, caution, and secrecy can cause a giant to stumble in a race it started, wandering off distracted into the forest almost before the race had begun and forgetting the vision which drove the original acquisition.

Apple’s executives and its devoted fans have insisted for 15 years that Apple wasn’t lagging, it was just choosing its moment. They insisted Apple “thinks different” about AI, that it values privacy and quality over first-to-market gimmicks. They’re, quite obviously, deluded, and Apple has since done a mea culpa.

There is some truth in Apple’s noble motives. Apple’s focus on privacy is genuine, and its reluctance to put out half-baked tech is usually a virtue. But in the case of Siri and AI, those same values became straitjackets. By ignoring the potential of large-scale data and dismissing conversational AI as a toy, Apple forfeited over a decade of lead time. Instead of quietly leapfrogging the competition with a secret AI breakthrough, Apple was quietly, even unknowingly, leapfrogged by others. It was left playing secret catch-up through deflection, ignoring user trends and industry evolution in an act of hubris which not even King Canute could have matched.

The quotes tell the story starkly:

In 2011, Apple heralded Siri as “a revolutionary assistant… you’ll keep finding more ways to use. It will get to know you.”

(except it was never allowed to – and ChatGPT got there first with its “memory and context” functions enabled in 2023, 12 years later)

A decade later, Apple’s own engineers joked that Siri was an unwanted stepchild passed around teams, with “no significant improvements” to show.

Apple’s top AI exec in 2020 insisted the big-data, big-model approach was “technically wrong.”

Only for Apple in 2023 to realise those big models were indeed the way forward, belatedly pouring billions into exactly what it once downplayed.

Apple’s leadership rejected chatbots as “gimmicky”, then turned around and tried to bolt ChatGPT onto iOS when it became clear consumers wanted exactly that kind of AI magic. At WWDC 2024, Craig Federighi enthusiastically pitched “AI for the rest of us”, claiming Apple’s AI would empower users and “isn’t the same as we’ve seen before”. By WWDC 2025, Federighi was calmly explaining why those promised empowering features weren’t ready yet and asking for patience until 2026. The about-faces and delays speak louder than any forum defence or marketing spin.

So, The BIG Question: does Apple have a grand mysterious AI plan that will suddenly vault it to the front?

The evidence suggests not.

What it has, instead, is a belated, hard-fought plan to catch up – a plan that is still taking shape and, by Apple’s own admission, won’t fully materialise until at least 2026. Unless there’s a big acquisition by September 2025, latest.

In the meantime, 1 billion+ users are enjoying AI on their iPhones not because of Apple, but in spite of it – via third-party apps and services that Apple had no hand in creating. Those users are slowly coming to enjoy an ecosystem which runs on their Apple devices (and every other platform) but doesn’t require Apple to oversee it in some nanny-state style of overly invasive censorship or constraint, just as it doesn’t police what they do in Safari.

These services are also platform agnostic, and take your data and conversations with you, wherever you log in. No walled garden needed. That’s a bitter pill for a company that redefined personal computing devices.

None of this is to say “Apple is doomed” in AI; Apple has immense resources and talent, and when it finally focuses, it can achieve a lot. Perhaps by 2026 or 2027, Siri (or whatever it’s called by then) will wow us again, and Apple will tout how they “got it right.” After 17 years trying and wasting a lead unlike any other in technology.

But the past 15 years of Siri/AI history make one thing clear: Apple isn’t leading this revolution – and it hasn’t been for a very long time. In fact it has fallen flat on its face through hubris and internal tribalism. The mystique of Apple’s secret sauce doesn’t apply here.

As one former Siri engineer put it bluntly,

“They already have a second-to-market delay, but this feels way worse… Apple missed AI by a mile.”

In the grand narrative of tech, the company that once “thought different” about the future somehow failed to recognise one of the biggest tech paradigm shifts of our era until it was unavoidably in its face.

And bitch-slapped it several times to wake it up.

In the end, the “mystery” of Apple’s AI strategy turned out to be no mystery at all – just a 15-year-long series of miscalculations, contradictions, and course corrections.

The faithful can cling to the belief that Apple simply operates on its own clock. But as the clock ticks, even some of Apple’s most ardent supporters have realised that the company best known for being ahead of the curve allowed itself to fall dramatically behind. By 15 years.

Siri, Siri, why so serious? Perhaps because, somewhere in Cupertino, there’s an acute awareness that Apple had the AI future in its grasp over a decade ago – and let it slip away.

As Dag Kittlaus aptly said, reminiscing about Siri’s early promise, “Siri was the last thing Apple was first on.”

The challenge now is whether Apple can ever catch up, or if it will remain, in the AI realm at least, just another voice in the crowd, drowned out by louder, more exciting and less constrained LLMs.

Because let’s face it, who amongst us hasn’t licked a toad, drunk mushroom tea, dropped LSD or slammed tequila shots until we hallucinated ourselves into oblivion? If hallucinations prove anything, it’s that LLMs are just as human as we are, and we should learn to live with them, because they’re mostly harmless and almost invariably incredibly useful all of the time.

So to answer the question: does Apple have a secret AI strategy?

No, it’s just been making shit up as it goes along for 15 years, and nothing’s changed. If that makes you feel secure and happy because it offers consistency, I’ve got some Unilever shares to sell you.

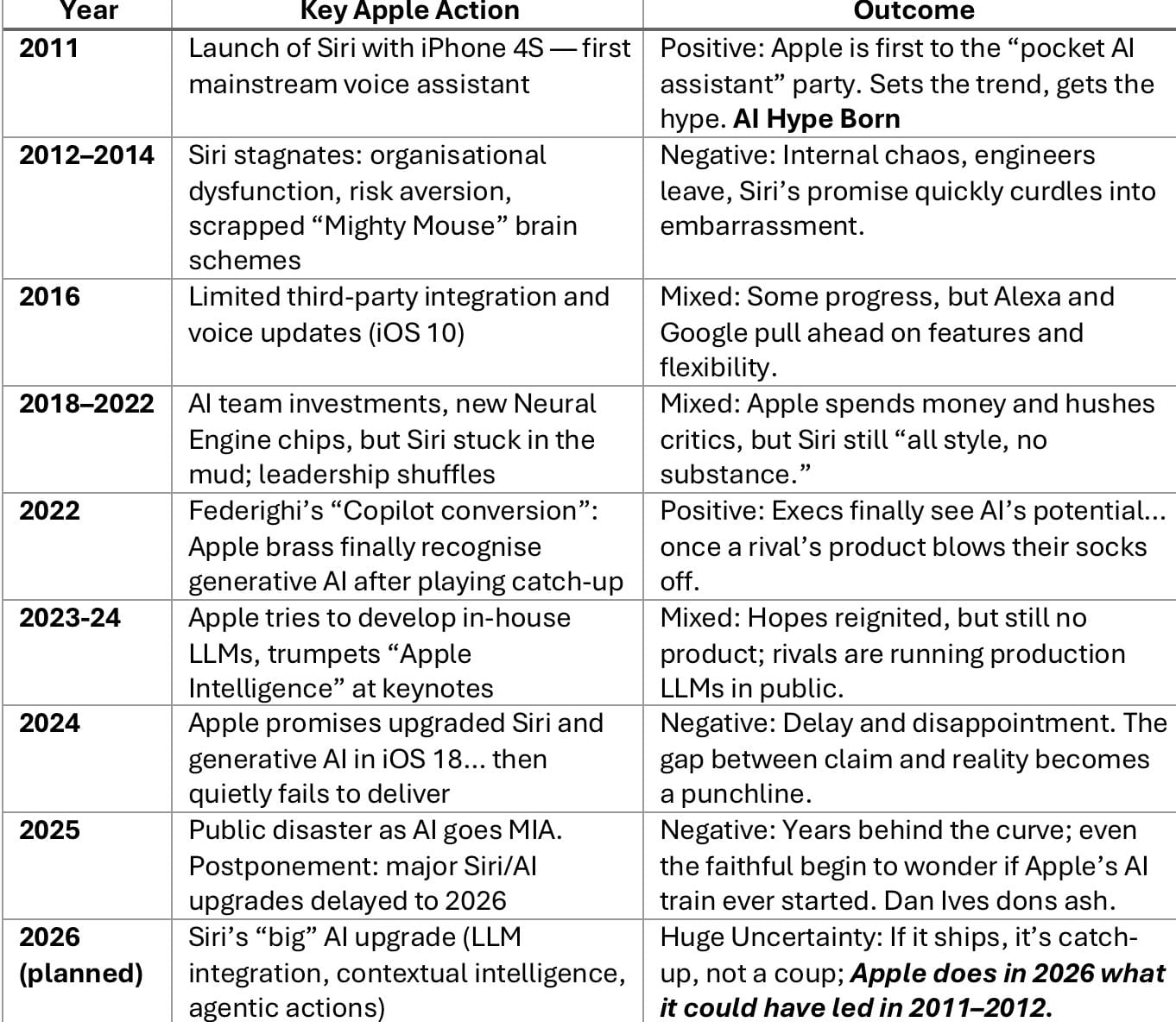

And as a final bonus, here’s the chart you might have been hoping for earlier on so you didn’t need to read through 8000 words to get here:

This table lays out, with all the subtlety of an iOS update notification, how Apple fumbled a fifteen-year headstart in digital assistants, keeping Siri locked in a walled garden—and in the process, transformed a potentially Perplexity-style platform into a perpetual “coming soon” slide.

Each year a fresh excuse, a new reorg, and a chorus of forum fans insisting the less Apple says, the deeper its genius must be.

Meanwhile, the real legacy is that Siri—once an agentic AI ahead of its time—became the tech world’s most visible example of a company squandering first-mover advantage by refusing to take risks, ship imperfect work, and let users (rather than PR and CGI) tell the story. Apple, it’s time to #FreeSiri

And don’t forget:

#FREESIRI … because it’s not Siri’s fault Apple’s done more U-turns than Brad Pitt in Apple’s Formula One: The Movie.

And How Apple could still hit $400 for Q4 2027 (if it can stop losing its top AI talent to Meta)

— Tommo, London, 31st July 2025 | X: @tommo_uk | Linkedin: Tommo UK