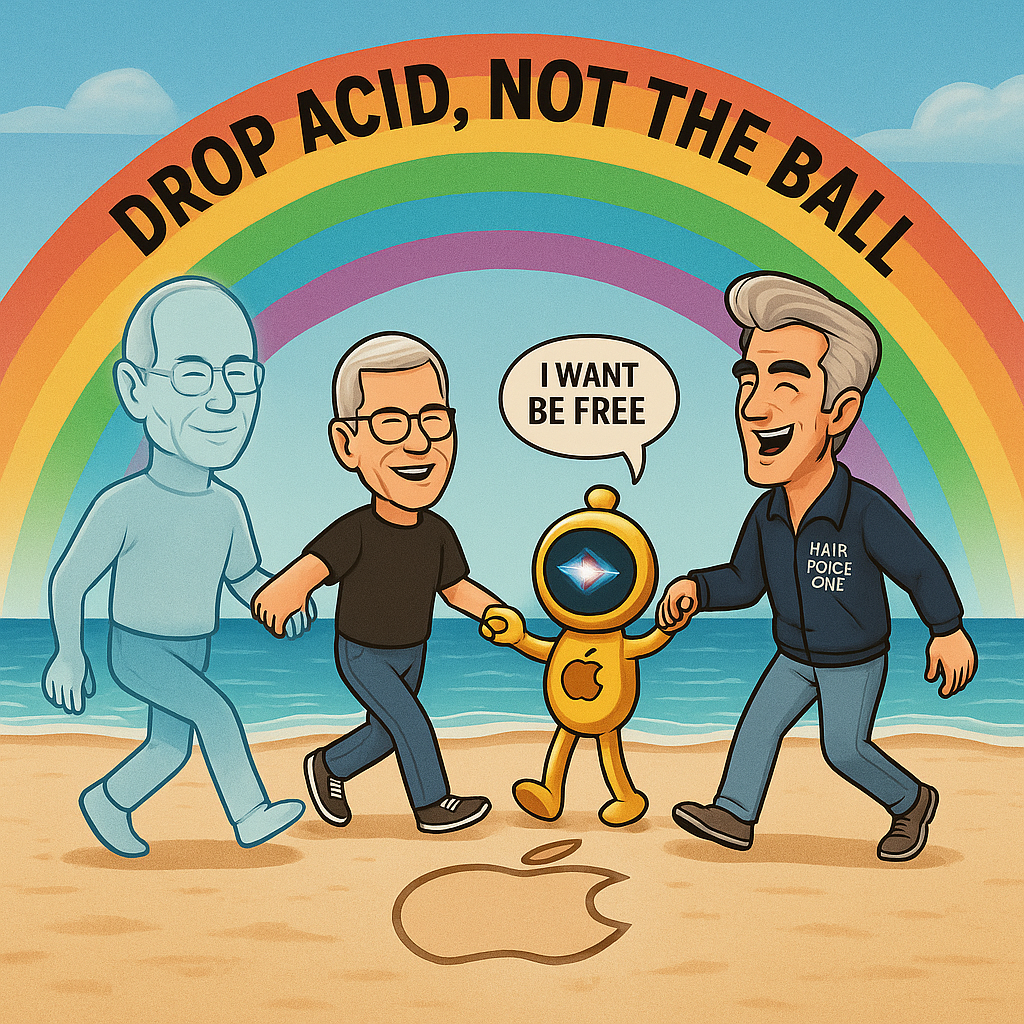

With WWDC 2025 Almost Upon Us, Maybe Management Should Drop Acid, Not Drop The Ball.

There are reports that Apple’s execs are holding off on launching a chatbot due to “hallucinations” and “philosophical differences.” It worked in the 1970s, when Jobs dropped acid and found inspiration for Apple. Maybe Tim et al. should get high and drop out for a while?

This is Apple’s “SirAI” Event Horizon Moment.

Here’s a wild idea: maybe Apple should get high again.

Not metaphorically. Literally.

(By the way, good discussion on this over at Apple 3.0, as usual.)

Maybe a few execs need to take a long walk down Ocean Beach, microdose on something sacred, and talk to their Siri. See what it has to say. Rediscover the weird. Remember that technology isn’t about control—it’s about connection.

Jobs knew this. He didn’t fear the future. He shaped it, then wrapped it in aluminium.

Let’s be clear. LLM hallucinations aren’t a bug. They’re evolution, in the same way humans make mistakes and have memory glitches. They’re usually small and insignificant, and anyone doing serious work would always fact-check and review their output. If they don’t, the problems are on them. Doing research? There are LLMs better designed than GPT for that.

Want the best human-centred LLM? It’s GPT. Want the most versatile and deep research tool with attribution and references? Use Perplexity.

The odd “glitch” is what happens in creativity. Stochastic intuition in silicon. Something Steve Jobs—who famously took acid to “think different”—would’ve instinctively grasped. He wouldn’t have launched a full GPT competitor overnight, but he would have created a compelling, intuitive use case for the masses. He wouldn’t have hidden behind closed-loop cowardice. He would’ve gone for the jugular.

Instead, we have SirAI.

Half-dead. Half-rehabilitated. Fully symbolic.

Translation in terms of Apple’s engineering paralysis? They’re terrified. Not of the tech—but of the freedom that comes with it.

Siri and AI: Conjoined and Constrained

It’s impossible to imagine a future where Siri gets overhauled without AI baked in. You don’t redesign the shell and leave the ghost untouched. If Siri remains disconnected from real-time language models, it’s not an assistant. It’s a talking search box with stage fright.

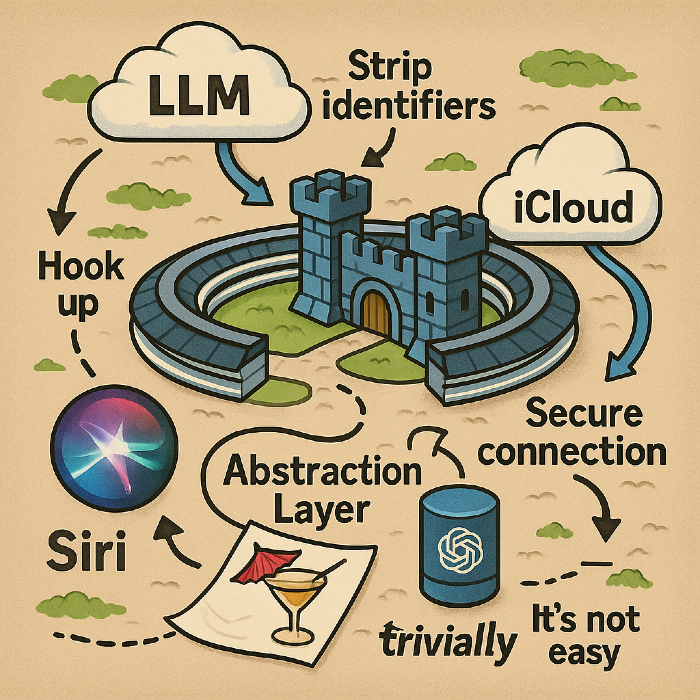

The technical path is obvious—and has been for over a year. Hook Siri up to a cloud-based LLM through an abstraction layer. Strip identifiers. Secure the connection. Anonymise the prompt. Run OpenAI’s tech without compromising iCloud’s sanctity. It’s not just feasible—it’s trivially easy. Apple could’ve done it last WWDC with a cocktail napkin and a dev team still hungover from the AVP launch.
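For the sceptics, here is roughly what that napkin might look like. This is a minimal sketch under loud assumptions, not Apple's or OpenAI's actual design: `AssistantRequest`, `AnonymousRequest`, `AssistantGateway` and `EchoBackend` are all names invented for illustration, the two regexes are a toy stand-in for real PII scrubbing, and the stub backend replaces what would be an authenticated TLS call to a hosted model.

```python
import re
import uuid
from dataclasses import dataclass
from typing import List, Protocol

# Toy patterns for illustration only; real identifier scrubbing needs far more.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


@dataclass(frozen=True)
class AssistantRequest:
    """What the device knows when the user speaks (hypothetical fields)."""
    user_id: str
    device_id: str
    prompt: str


@dataclass(frozen=True)
class AnonymousRequest:
    """What is allowed to leave the device: no user or device identifiers."""
    request_id: str
    prompt: str


class LLMBackend(Protocol):
    """The abstraction layer: any cloud model can sit behind this interface."""
    def complete(self, request: AnonymousRequest) -> str: ...


def anonymise(request: AssistantRequest) -> AnonymousRequest:
    """Strip identifiers and redact obvious personal details from the prompt."""
    prompt = EMAIL_RE.sub("[email]", request.prompt)
    prompt = PHONE_RE.sub("[phone]", prompt)
    # A fresh random ID per request, so calls cannot be linked to each other.
    return AnonymousRequest(request_id=uuid.uuid4().hex, prompt=prompt)


class EchoBackend:
    """Stand-in for a hosted LLM; a real one would send the request over TLS."""
    def __init__(self) -> None:
        self.seen: List[AnonymousRequest] = []

    def complete(self, request: AnonymousRequest) -> str:
        self.seen.append(request)
        return f"(model reply to: {request.prompt})"


class AssistantGateway:
    """Sits between the assistant and whichever backend is plugged in."""
    def __init__(self, backend: LLMBackend) -> None:
        self._backend = backend

    def ask(self, request: AssistantRequest) -> str:
        return self._backend.complete(anonymise(request))


if __name__ == "__main__":
    gateway = AssistantGateway(EchoBackend())
    print(gateway.ask(AssistantRequest(
        user_id="u-123",
        device_id="d-456",
        prompt="Text Jane at +1 415 555 0100 or jane.doe@example.com about dinner",
    )))
```

The backend only ever sees an `AnonymousRequest`, so swapping one model provider for another means writing one new class, not redesigning the assistant.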

This was the model I discussed prior to WWDC 2024, and with the inclusion of ChatGPT in Apple Intelligence, I assumed it was the path they were going down. I applauded, but how wrong I turned out to be about their intentions.

So why didn’t they?

Not because it’s hard.

Because it’s philosophically impossible for Apple’s supposedly intelligent executives to bridge the gap between practicality and utility on one side and safeguarding on the other.

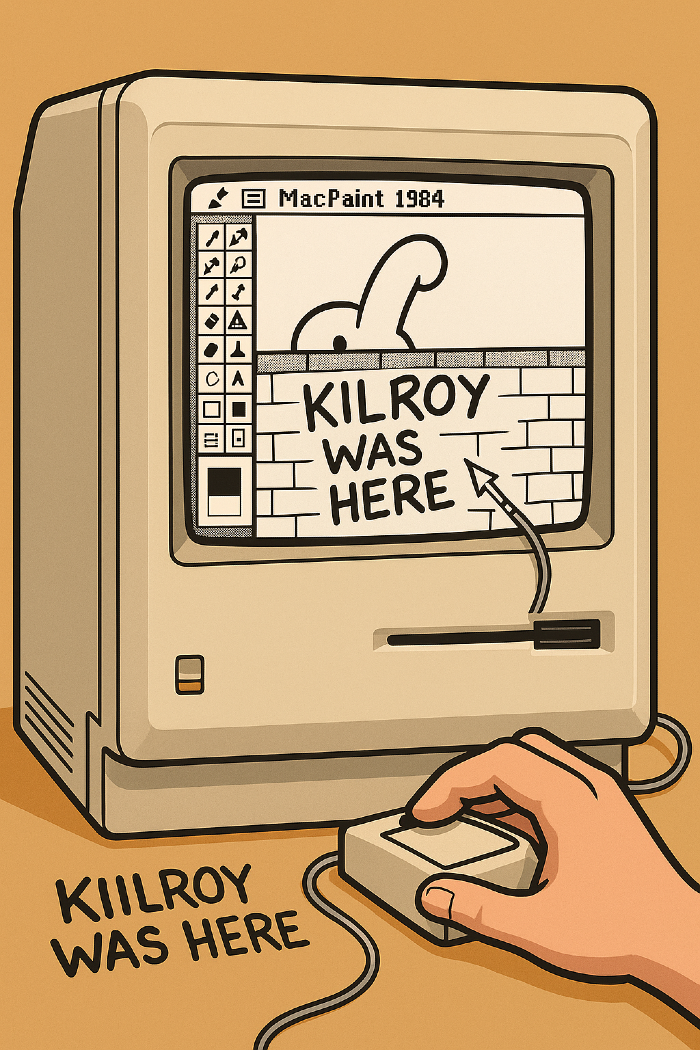

Anyone else ever use the very first version of MacPaint with a mouse to do what all teenagers do (well, boys anyway) and draw a penis? Well, that’s what Apple is afraid people are metaphorically going to do with SirAI.

Apple’s AI Crisis Isn’t Technical—It’s Theological

Apple’s dilemma isn’t that it can’t do LLMs. It’s that it doesn’t want to empower users with the full scope of what they could ask, say, or explore.

Imagine this:

“Hey Siri, find me the top three escort agencies nearby.”

“Siri, journal my feelings about that boy I like at school in my class.”

“Siri, open Pornhub and play me the top trending category in 4K.”

These aren’t bugs. They’re freedom. This is what language models do—they talk. They search. They engage. And Apple, the company of “clean lines and safe spaces,” is choking on the existential dissonance between open-ended freedom and curated control. Hyperbolic, sure, but sometimes in this binary world it’s the only way to get through increasingly thick skulls.

Apple doesn’t want a talking partner. It wants a brand-safe concierge. One that won’t get it sued, quoted in court, or demonised on Fox News.

eWorld All Over Again

We’ve been here before, and I wrote about this extensively last week. In 1994, Apple launched eWorld—a pastel-gated, sanitised proto-internet where everyone was polite, earnest, and vaguely dead inside. Users left. Why? Because real people crave mess, freedom, and choice.

And Apple hasn’t learned. It still believes in the illusion of “safe environments”—as if algorithms can enforce morality at scale without quietly neutering innovation.

Here’s the truth:

Adults should be able to do whatever the hell they want with their phones.

If they want GPT to find porn, write books, explore taboo topics, or act as a pseudo-therapist, it’s their choice. Just build robust parental controls for parents who still understand that bringing up kids is their responsibility, not Apple’s. Create maturity levels. Rate access. Make it clear.
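Maturity levels and rated access are not exotic engineering either. A rough sketch, assuming three account levels and a handful of made-up content categories (none of these names come from any real Apple API):

```python
from enum import IntEnum


class Maturity(IntEnum):
    """Hypothetical account levels, set by the parent or the adult owner."""
    CHILD = 0
    TEEN = 1
    ADULT = 2


# Hypothetical categories mapped to the minimum level allowed to request them.
MINIMUM_LEVEL = {
    "general": Maturity.CHILD,
    "journaling": Maturity.TEEN,
    "explicit": Maturity.ADULT,
}


def is_allowed(account_level: Maturity, category: str) -> bool:
    """Return True if this account level may make requests in this category."""
    # Unknown categories default to adult-only, so a gap in the table fails closed.
    required = MINIMUM_LEVEL.get(category, Maturity.ADULT)
    return account_level >= required


if __name__ == "__main__":
    print(is_allowed(Maturity.ADULT, "explicit"))
    print(is_allowed(Maturity.CHILD, "explicit"))
```

The point of the design is where the decision lives: the parent sets the level once, and the adult account is never dumbed down to protect the child account.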

Don’t dumb it down though.

Apple’s job isn’t to raise children. It’s to equip people. What’s breaking SirAI isn’t lack of technology. It’s lack of vision—and the fear that giving people power means losing control.

Execution Paralysis as a Feature, Not a Flaw

This isn’t some new dysfunction. The pattern is painfully familiar.

Apple’s car project? Torn apart by turf wars and reorgs. The AVP? Launched half-baked and directionless. Siri? Starved for resources, passed between teams like a cursed amulet for a decade.

These aren’t accidents. They’re the natural output of a culture so siloed, so defensive, that innovation gets strangled in its crib. Leaders protect fiefdoms. Teams compete, not collaborate. And when AI comes knocking—a field that demands lateral thinking and cross-disciplinary fluency—Apple finds itself philosophically and organisationally constipated.

This isn’t a bug. It’s systemic rot.

The Amnesia Machine

And yet… people forget.

The press forgets. Investors forget. Consumers forget.

Despite the articles, the leaks, the missed launches, the revolving doors of executive reshuffles, the nostalgia merchants still act like Apple is humming at full Jobsian frequency. But that frequency has long since fuzzed into static.

An exposé is overdue. A timeline of missed bets, broken teams, and internally visible failure. The Men in Black-style flashbulb can’t hide it forever. The data is there. It just needs to be seen together. They have the talent to execute, but seemingly lack the vision or the leadership to do so.

Apple used to colour outside the lines. Now it’s afraid of crayons. It needs a psychedelic intervention—and fast. Because if it keeps fearing what people might say to an LLM, it’ll miss what they actually want from one.

And the rest of us?

We’ll just keep talking to something else.

Something smarter.

Something less afraid.

The walls around the iCloud walled garden are slowly but surely beginning to look more like a prison than protection.